Introduction

Some mornings it feels as if every major headline is about AI. For leaders trying to act on today’s AI news, the hard part is not finding information; it is deciding what actually matters. A single press release can move budgets, change risk profiles, or rewrite a product roadmap.

We see the same pattern in every briefing with CIOs, CISOs, and operations leaders. There is an endless stream of model launches, chip announcements, and funding rounds, yet very little signal on what really counts for security, costs, and competitive position. It is easy to overreact to noise or, worse, miss a quiet change that reshapes an entire market.

That is why we group today’s most important AI news into a few strategic themes. Enterprise AI is moving from simple chatbots to autonomous agents. Hardware and infrastructure are under pressure from a global compute crunch. Lawmakers and courts are rewriting what is allowed. Venture capital is flooding the field while warning signs of an AI bubble grow louder.

At VibeAutomateAI, we focus on what these shifts mean in practice. We connect headlines with clear actions on adoption, automation, cybersecurity, and governance. By the end of this article, we will have walked through the key developments that should guide enterprise AI strategy through 2025, and how to turn fast‑moving news into concrete plans across IT, security, and operations.

As Andrew Ng has put it, “AI is the new electricity”—it seeps into every industry once the right infrastructure and governance are in place.

Key Takeaways

Staying on top of today’s AI news is hard, so this section gives a fast briefing before we dive deeper.

- Enterprise AI is moving from simple chatbots to frontier agents that can run long, multi-step tasks on their own. Tools like AWS’s Kiro show how coding work can continue for days with only light human checks. This shift raises questions about team structure, software life cycles, and risk controls. Early movers will gain speed but also need stronger oversight.

- Cloud providers are spending billions building their own AI chips to compete with Nvidia. Amazon reports that its in-house silicon is already a multibillion-dollar business. Micron is steering production toward high-bandwidth memory to serve AI data centers. These moves change long-term cost curves and supply risk for enterprises.

- Meta has reversed its stance on news content and is signing multiyear data deals with outlets such as CNN, Fox News, and Le Monde. Meta AI can now answer current-event questions using licensed, linked content. This points toward a broader industry move where high-quality training data is paid for rather than scraped.

- Manufacturing executives say nearly half of their modernization budgets are going to AI, with clear targets for profit gains within two years. Partnerships like EY and NVIDIA show how physical AI pilots move from lab tests into plants and warehouses. The same pattern is starting in finance and professional services.

- Lawsuits from The New York Times and the Chicago Tribune against Perplexity highlight rising copyright risk around model training. These cases may set ground rules for how far fair use extends. Any enterprise system that trains on third-party data now needs stronger legal review.

- Global demand for training and inference is driving a compute shortage that shows up at every layer of today’s AI news. New GPU clusters in regions like Sweden and national deals such as the UK–Canada compute agreement are direct responses. Quantum and analog chips sit in the background as a long-term path to more power-efficient compute.

- AI startup valuations keep climbing, driven by “kingmaking” VC strategies that pour huge sums into very young companies. This fuels innovation but also increases vendor risk for buyers. Enterprises need deeper financial checks before building on top of any new AI platform.

The Enterprise AI Revolution: From Chatbots To Autonomous Agents

For enterprise leaders, the most important piece of today’s AI news is that simple chatbots are no longer the main story. At AWS re:Invent 2025, Amazon declared the chatbot cycle “over” and shifted attention to frontier AI agents. These systems do not just answer questions. They plan, act, and adjust across long workflows with little direct guidance.

Kiro, AWS’s headline agent, codes for extended periods, refactors services, and opens pull requests with only periodic human review. That changes how we think about development teams, sprint planning, and testing. Work once split across several roles can now move as a continuous flow between humans and agents.

Most organizations are not ready for that level of autonomy. Access controls, audit trails, and approval paths are often built for tools that only suggest, not act. At VibeAutomateAI, we advise clients to start with narrow, well-bounded agent use cases, such as internal tooling or documentation clean-up. From there, teams can expand to higher-impact work once they prove safety, reliability, and measurable value.
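One way to picture an approval path for agents that act rather than suggest is a small gate in front of every action. The sketch below is illustrative only: `AgentGate`, the action names, and the approval rule are hypothetical and do not describe Kiro or any AWS service.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional

# Hypothetical policy: which actions an agent may take, and which need a human sign-off.
ALLOWED_ACTIONS = {"edit_docs", "open_pull_request"}
NEEDS_APPROVAL = {"open_pull_request"}


@dataclass
class AgentGate:
    """Checks every agent action against the policy and records the decision."""
    audit_log: list = field(default_factory=list)

    def request(self, agent: str, action: str, approved_by: Optional[str] = None) -> bool:
        if action not in ALLOWED_ACTIONS:
            decision = "denied"
        elif action in NEEDS_APPROVAL and approved_by is None:
            decision = "pending_approval"
        else:
            decision = "allowed"
        # Every request is logged, including the ones that were refused.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "agent": agent,
            "action": action,
            "approved_by": approved_by,
            "decision": decision,
        })
        return decision == "allowed"


gate = AgentGate()
gate.request("docs-agent", "edit_docs")                            # allowed without sign-off
gate.request("docs-agent", "open_pull_request")                    # held until a human approves
gate.request("docs-agent", "open_pull_request", approved_by="li")  # allowed after sign-off
```

Starting with a short allow-list like this keeps the first agent use cases narrow, and the audit log gives reviewers the trail they need before the scope expands.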

“AI is going to change how we do everything, but it won’t change what matters: clarity of purpose and clear accountability.” — Adapted from common guidance shared by enterprise CIO councils

AWS re:Invent 2025: Democratizing Custom AI Development

AWS also used re:Invent to push custom model development closer to the mainstream. New SageMaker features shorten the path from raw enterprise data to tuned large language models, with more automation around data prep, training, and deployment. The stated goal is to give teams control over their own models without needing a research lab on staff.

For many teams, the choice now is not “AI or no AI” but custom versus off-the-shelf models. Custom models fit best when:

- Data privacy is non-negotiable.

- Domain language is highly specific.

- Small gains in accuracy move important business metrics.

AWS’s on-prem Nvidia-based “AI Factories” add another option for firms that must keep data in specific regions or facilities.

To decide, we suggest a clear framework at VibeAutomateAI:

- Start with a baseline test using a managed foundation model.

- Estimate gains from further tuning against the cost of data work, training time, and long-term support.

- Move to custom work only when those gains are material to revenue, security, or compliance—not just “nice to have”.
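The framework above can be sketched as a rough cost-benefit check. Everything here is an assumption made for illustration: the `TuningEstimate` fields, the three-year horizon, the `min_ratio` threshold, and the rule that strict data rules lead straight to custom work. Real figures would come from your own baseline test.

```python
from dataclasses import dataclass


@dataclass
class TuningEstimate:
    """Hypothetical inputs for one use case."""
    baseline_accuracy: float        # measured with a managed foundation model
    expected_tuned_accuracy: float  # estimated result after custom tuning
    value_per_point: float          # annual value of one accuracy point, in dollars
    data_work_cost: float           # one-off data preparation cost
    training_cost: float            # one-off training cost
    annual_support_cost: float      # recurring cost of owning the model
    strict_data_rules: bool = False  # privacy or residency rules that rule out shared models


def recommend(e: TuningEstimate, years: int = 3, min_ratio: float = 1.5) -> str:
    """Return "custom" only when the estimated gain clearly outweighs the cost."""
    if e.strict_data_rules:
        return "custom"
    gain_points = (e.expected_tuned_accuracy - e.baseline_accuracy) * 100
    benefit = gain_points * e.value_per_point * years
    cost = e.data_work_cost + e.training_cost + e.annual_support_cost * years
    if cost > 0 and benefit / cost >= min_ratio:
        return "custom"
    return "managed"


big_gain = TuningEstimate(0.82, 0.90, 50_000, 100_000, 150_000, 50_000)
small_gain = TuningEstimate(0.82, 0.83, 50_000, 100_000, 150_000, 50_000)
print(recommend(big_gain), recommend(small_gain))
```

With these made-up numbers, an eight-point gain clears the threshold and a one-point gain does not, which is the “material, not nice to have” test in numeric form.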

Meta’s Strategic AI Integration Across Platforms

Another strand of today’s AI news comes from Meta’s push to weave AI into every part of its products. The company is testing an AI support assistant that acts as a single front door for help across Facebook and Instagram. For users, this can mean faster answers and fewer dead ends. For Meta, it concentrates support data in one place to train better models.

At the same time, Meta faces an EU investigation into a policy that blocks rival chatbots from running inside WhatsApp. Regulators are asking whether control over a major messaging platform is being used to limit competition. That question will shape how large platforms host or restrict third-party AI tools.

Enterprises should take two lessons from this:

- Customer-facing AI can cut handling times and raise satisfaction, but only when escalation paths to humans stay clear.

- Any AI strategy that relies on a single dominant platform carries regulatory and business risk.

At VibeAutomateAI, we advise clients to build channel-agnostic assistants that can move between web, mobile, and chat services without locking into one gatekeeper.

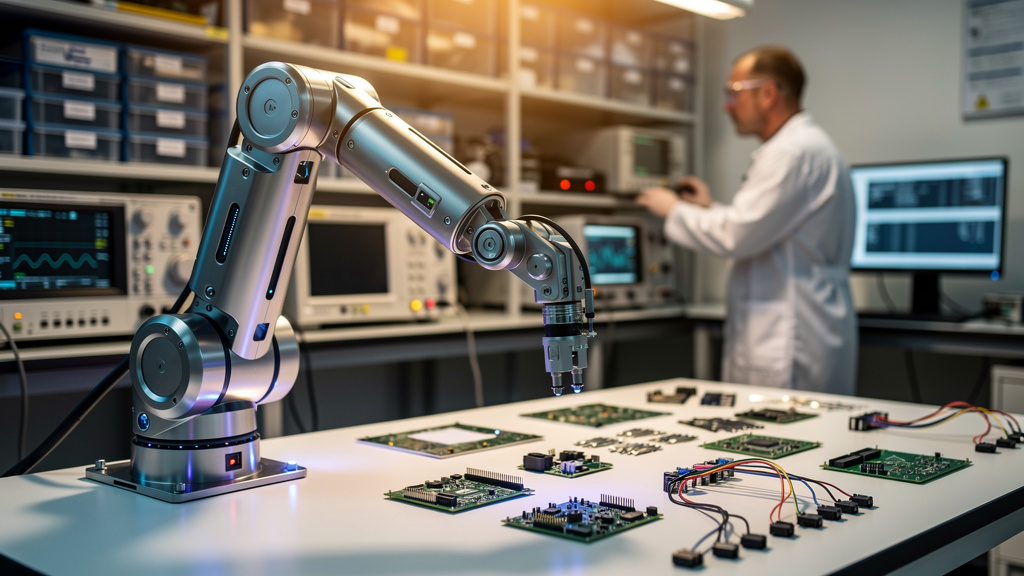

AI’s Sectoral Impact: Manufacturing, Finance, And Cybersecurity

Across today’s AI news, one number stands out for industrial firms. Many manufacturing executives now plan to spend almost half of their modernization budgets on AI within the next two years. These are not just experiments. They are tied to hard targets on uptime, yield, and margin.

In plants, physical AI projects with partners like EY and NVIDIA focus on:

- Predictive maintenance

- Computer-vision-based quality checks

- Better demand forecasting

Sensors feed models that flag issues before a line fails, watch for defects in real time, and adjust inventory orders with more context. Key metrics include unplanned downtime, scrap rates, and working capital tied up in stock.

In finance and professional services, AI agents take on time-consuming, rules-heavy tasks. Accounting firms use them for invoice coding, basic compliance checks, and first-pass variance analysis. Malaysia’s Ryt Bank goes further as an AI-focused bank, designing core products with automation in mind from day one. In all of these cases, people still make final calls, but AI handles much of the data grinding.

Cybersecurity runs through every sector shift. As systems automate more work, the cost of a breach rises because attackers can now target both data and the agents acting on that data. At VibeAutomateAI, we encourage clients to pair every new operational AI project with a matching security review that covers access, monitoring, and incident response.

Defending Against AI-Orchestrated Cyber Threats

Anthropic’s recent research showed how AI can plan and execute cyberattacks faster than human-led groups. Models can scan for weak points, write convincing phishing emails, and chain exploits together with far less manual effort. That means scale and speed, not just sophistication, are changing.

Security teams need defenses that use AI at least as well as attackers do. Machine learning systems for cloud-native container security can learn normal behavior and flag strange patterns within seconds. Training grounds like HTB AI Range let defenders practice against realistic AI-powered attacks without risking live systems.
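To make “learn normal behavior and flag strange patterns” concrete, here is a deliberately simple statistical stand-in. It is a z-score check, not the machine learning used in commercial container-security tools, and the function name, threshold, and sample numbers are all hypothetical.

```python
from statistics import mean, stdev


def flag_anomalies(baseline, recent, threshold=3.0):
    """Flag recent values that sit far outside the baseline's normal range.

    baseline: counts observed during normal operation (for example, outbound
              connections per minute from one container).
    recent:   new observations to check against that baseline.
    """
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly flat baseline: any deviation at all is unusual.
        return [x for x in recent if x != mu]
    return [x for x in recent if abs(x - mu) / sigma > threshold]


normal_minutes = [12, 15, 11, 14, 13, 12, 16, 14]
latest_minutes = [13, 15, 90, 14]
print(flag_anomalies(normal_minutes, latest_minutes))  # → [90]
```

Production systems model many signals at once and adapt their baselines over time, but the core idea is the same: define normal from observed behavior, then alert on sharp departures from it.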

We suggest a simple structure when we work with CISOs:

- Map current controls against AI-specific threats such as automated reconnaissance, adaptive phishing, and fast-moving lateral movement.

- Invest in tools that detect and respond automatically where possible, while still keeping humans in charge of final containment decisions.

- Treat this as continuous practice, supported by red-team exercises that now include AI-driven attack paths.

As security expert Bruce Schneier has said, “Security is a process, not a product.” That is even more true when AI can accelerate both attacks and defenses.

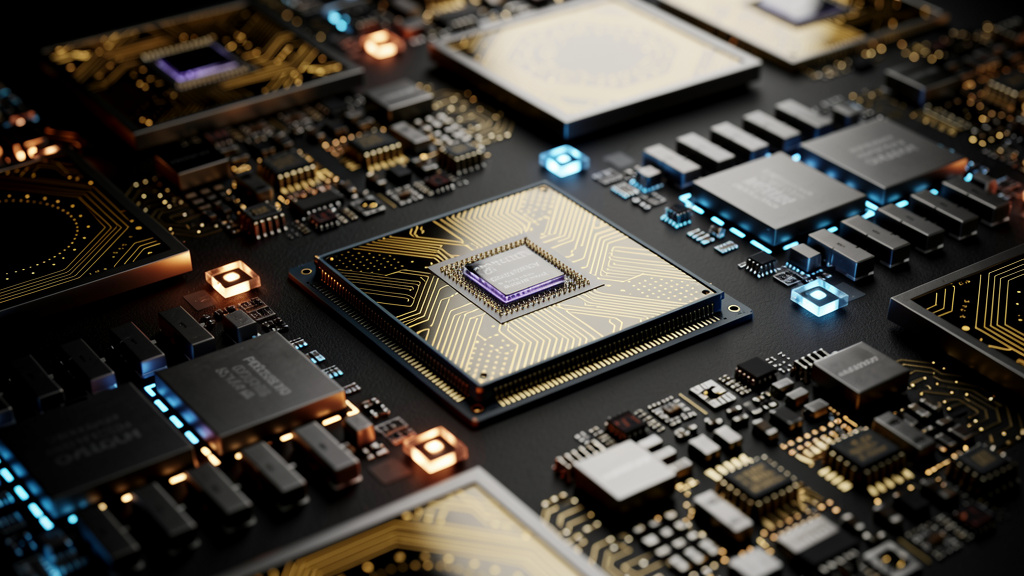

The Hardware Arms Race: Chips, Memory, And Computing Infrastructure

Beneath much of today’s AI news sits a basic problem: there are not enough high-end chips to match demand. Training large models and serving them at scale both rely on GPUs and fast memory, and those parts are in short supply. One report describes AI as mainstream while document infrastructure fails to keep up with the demands of these systems.

Micron’s shift away from some consumer segments to focus on high-bandwidth memory for AI servers marks a turning point. Semiconductor planning now follows AI data center needs more than phone or PC cycles. Amazon adds to this change with its own AI chips, which already bring in billions in revenue and reduce its reliance on Nvidia. Other cloud providers follow the same pattern to control costs, tune performance, and steady their supply chains.

New players such as Singularity Compute are building GPU clusters in regions like Sweden to ease the crunch and give enterprises more options. Meanwhile, IBM Research is testing analog AI chips that aim to cut power use for deep learning. For CIOs, these moves shape long-horizon questions: when does it make sense to reserve capacity with one cloud, split across several, or bring some compute on-prem with dedicated stacks?

International Collaboration On Quantum And Next-Generation Computing

While today’s AI news focuses on GPUs and memory, governments are working on what comes next. Updates appear regularly through OpenAI News and other major AI research organizations. The United Kingdom and Germany are aligning their science programs to speed up quantum supercomputing. The UK and Canada have struck an agreement around shared AI compute, and the United States and Japan are deepening their AI and tech partnership.

Quantum AI is still mostly in research labs. It promises to solve certain classes of problems, such as complex optimization and materials modeling, that strain classical machines. For most enterprises, the practical impact will arrive over a five- to ten-year window, not in the next budget cycle.

Our advice at VibeAutomateAI is straightforward. Track quantum and analog chip progress as part of long-term infrastructure planning, but do not delay near-term AI projects while waiting for them. Focus current effort on flexible architectures that can adapt if new compute models become accessible through cloud providers later.

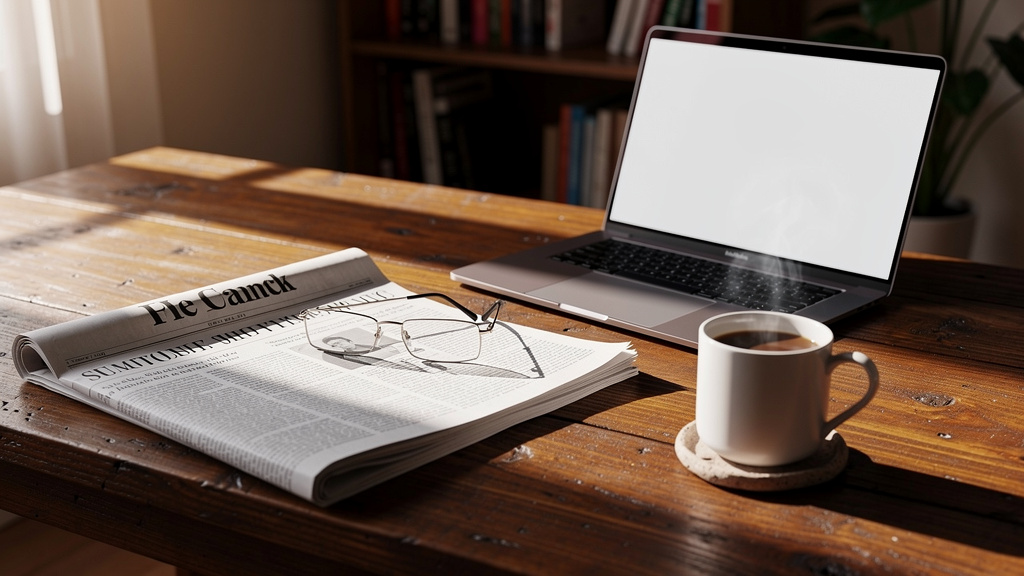

The Content Wars: AI Training Data, Copyright Battles, And Publisher Deals

Content rights sit at the center of many of today’s AI news stories. A recent report on AI use in newspapers found that adoption in media is widespread yet poorly disclosed. Meta’s switch from cutting news traffic to paying for it is a clear example of how fast positions are changing. After shrinking Facebook News, Meta is now signing multiyear data deals with publishers such as USA Today, CNN, Fox News, People, The Daily Caller, and Le Monde.

When someone asks Meta AI about a current event, the assistant now draws on this licensed material and links back to the source. Including outlets from across the political spectrum also addresses long-standing claims of bias in feed design and curation. For publishers, the deals offer both money and a chance to recover some traffic as users click through for full stories.

In contrast, Perplexity faces lawsuits from The New York Times and the Chicago Tribune over alleged misuse of their content for model training and answers. The outcome will influence how courts see fair use when models copy structure, style, or facts at scale. The strong reaction to the Tony Blair Institute’s report on AI copyright shows how sensitive this topic has become around the world.

For enterprises training internal models, the message is clear. Scraping large amounts of third-party content now carries rising legal and brand risk. At VibeAutomateAI, we recommend:

- Clear sourcing policies

- Greater use of licensed datasets

- Close work with legal teams before any project that touches external text, images, or audio

The Maturing User Adoption Picture

One quieter piece of today’s AI news is that ChatGPT’s user growth has slowed. Early adopters have already tried it, and new users now compare many tools instead of flocking to one. Expectations are higher, and basic chat alone no longer impresses.

Platforms are responding in different ways. Google is testing a merged interface for AI Overviews and AI Mode in search, trying to keep users inside a blended search and assistant experience. Its Veo 3 video tools are now available more widely, while OpenAI’s Sora has reached markets like Thailand through local apps. Meta is betting that real-time news and social context will pull users toward its assistant.

For enterprises choosing generative tools, the lesson is to judge on fit, not buzz. Compare:

- Depth of domain features

- Security posture and compliance options

- Data controls and retention

- Integration capabilities with your existing stack

At VibeAutomateAI, our side-by-side tool comparisons focus on those factors so teams can move past surface demos and pick tools that will hold up in daily work.

Market Dynamics: Valuations, Venture Capital Strategy, And Bubble Concerns

The money side of today’s AI news is as intense as the technology side. Startups like Aaru are reaching billion-dollar valuations at the Series A stage. Yoodli has reportedly tripled its value to above three hundred million dollars by helping people improve their communication skills with AI coaches. Micro1, which competes with Scale AI on data and services, has passed one hundred million dollars in annual recurring revenue.

Many venture firms are taking a “kingmaking” approach. They pour very large checks into young companies to give them an edge in hiring talent and booking GPU time. This speeds innovation but also concentrates power in a few hands. Anthropic’s own plans for an IPO and its two hundred million dollar deal with Snowflake show how fast leading players can scale.

At the same time, not every investor is going all in. Nexus Venture Partners, for example, has decided to keep only part of its new fund for AI, spreading the rest across other sectors. Meta’s purchase of Limitless, an AI device startup, and its hiring of former Apple design leader Alan Dye show how large firms also use acquisitions to jump ahead. For buyers, this all raises a simple but serious question: will the vendors they pick today still be there in three years?

Strategic Implications For Enterprise Technology Procurement

Procurement teams now need a deeper playbook when they respond to today’s AI news with new buying plans. That playbook should cover both technical fit and business stability.

- Start with vendor health checks that go beyond the pitch deck. Look at revenue mix, funding runway, and any signs a startup might be pushed toward a sale. Ask how much of their service depends on credits or discounts from a single cloud or partner.

- Reduce single-vendor dependence wherever possible. Spread critical workloads across more than one AI platform, keep data exports easy, and consider open models for some use cases. This lowers the impact if one supplier changes terms, gets acquired, or shuts down a product line.

- Balance innovation from startups with the steadiness of larger providers. Use newer firms for targeted pilots or features where change is acceptable, while keeping core systems on platforms with long service records. At VibeAutomateAI, we publish detailed vendor comparisons and checklists to support this kind of split strategy.
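The vendor health checks above can be turned into a simple, repeatable screen. This is a minimal sketch: the `VendorProfile` fields and every threshold are hypothetical and should be replaced with limits your procurement and finance teams agree on.

```python
from dataclasses import dataclass


@dataclass
class VendorProfile:
    """Hypothetical facts gathered during due diligence."""
    name: str
    runway_months: int             # funding runway at current burn
    largest_customer_share: float  # share of revenue from the single biggest customer
    cloud_credit_share: float      # share of compute paid for with one cloud's credits
    supports_data_export: bool     # can we get our data out in a usable format?


def vendor_flags(v: VendorProfile) -> list:
    """Return the warning signs worth raising before signing."""
    flags = []
    if v.runway_months < 18:
        flags.append("short funding runway")
    if v.largest_customer_share > 0.30:
        flags.append("concentrated revenue")
    if v.cloud_credit_share > 0.50:
        flags.append("depends on single-cloud credits")
    if not v.supports_data_export:
        flags.append("no easy data export")
    return flags


startup = VendorProfile("ExampleAI", runway_months=10, largest_customer_share=0.45,
                        cloud_credit_share=0.20, supports_data_export=True)
print(vendor_flags(startup))
```

A vendor with several flags is not automatically ruled out, but it is a better fit for a targeted pilot than for a core system.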

Regulatory Outlook And International AI Governance

The policy side of today’s AI news is moving almost as fast as the models themselves. In the United States, industry groups recently failed to block states from writing their own AI rules. With no broad federal law in place yet, companies now face a patchwork of state policies around areas such as hiring, credit scoring, and biometric use.

Internationally, countries are building alliances to guide AI development and share compute. The US–Japan collaboration, the UK–Canada compute deal, and the UK–Germany quantum program all aim to set shared standards around safety, security, and access to hardware. These pacts influence how fast companies in each region can train and deploy advanced systems.

Regulators are also looking closely at platform behavior. The EU probe into Meta’s treatment of rival chatbots on WhatsApp is one clear example of concern about gatekeepers. For enterprises, this means AI plans cannot ignore regulation. Internal governance has to develop side by side with external rules. At VibeAutomateAI, we encourage clients to build internal frameworks now rather than wait for every law to settle.

Building Enterprise AI Governance Frameworks

A solid AI governance model does not need to be fancy, but it does need to be real. We see the strongest programs sharing a few core elements.

- Clear policy that sets what the organization will and will not do with AI. This covers data sources, use in products, internal tools, and third-party access. It should also outline which teams sign off on new uses.

- Defined processes for privacy, transparency, and bias checks. That includes data minimization, strong access controls, documentation of model behavior, and regular audits. It also means testing for unfair outcomes and correcting them through data or design changes.

- Security practices aimed at AI systems, not just classic apps. These include role-based access, adversarial testing, and incident response plans that assume models themselves might be attacked or misused. The frontier AI research lab formed by Thomson Reuters and Imperial College London is one public example of the kind of work needed on safe deployment. VibeAutomateAI provides guidance on linking this governance work to clear measures of cost and value for each generative AI use case.

As many regulators stress, trust in AI depends on “proportional, risk-based governance” rather than one-off paperwork.

Strategic Recommendations: Positioning Your Organization For AI Success

Taken together, today’s AI news paints a picture of technology that is moving fast and touching every part of the business. The challenge for leaders is to bring order to that motion, starting with an honest view of current readiness.

At VibeAutomateAI, we often suggest mapping use cases into three bands:

- Proven, narrow tasks – document search, coding help, internal chat, and similar use cases where tools are mature and risks are lower.

- Controlled agent use – development, finance, or operations workflows where AI agents act with clear guardrails, approvals, and logging.

- Longer-horizon bets – more ambitious ideas that depend on maturing hardware, models, or regulation and should be treated as structured experiments.
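The three bands can be expressed as a small triage function for a use-case inventory. The inputs and the ordering of the checks are our own simplification for illustration; in particular, treating an autonomous agent without guardrails as a structured experiment is an assumption, not a rule from any standard.

```python
from enum import Enum


class Band(Enum):
    PROVEN = "proven, narrow task"
    CONTROLLED_AGENT = "controlled agent use"
    LONG_HORIZON = "longer-horizon bet"


def assign_band(tool_is_mature: bool, agent_acts_autonomously: bool,
                has_guardrails: bool) -> Band:
    """Place one use case into a band using three yes/no questions."""
    if not tool_is_mature:
        # Depends on hardware, models, or regulation that is still maturing.
        return Band.LONG_HORIZON
    if agent_acts_autonomously:
        # Agents belong in the middle band only once approvals and logging exist.
        return Band.CONTROLLED_AGENT if has_guardrails else Band.LONG_HORIZON
    return Band.PROVEN


use_cases = {
    "document search": (True, False, False),
    "invoice-coding agent": (True, True, True),
    "fully autonomous plant scheduling": (False, True, False),
}
for name, answers in use_cases.items():
    print(f"{name}: {assign_band(*answers).value}")
```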

Infrastructure plans should follow the same structure. Decide where to rely on shared public cloud stacks, where to reserve dedicated capacity, and where on-prem stacks or edge devices are needed. Custom LLM work makes sense when it can clearly separate your services from rivals or when strict data rules apply.

Governance and talent round out the picture:

- Set up a cross-functional AI committee that includes legal, security, risk, and business heads.

- Build a vendor strategy that avoids single points of failure.

- Fund ongoing training so both technical and business teams can keep pace with change.

Throughout this process, VibeAutomateAI serves as a reference point with guides, tool comparisons, and playbooks that connect fast-moving news with concrete next steps.

Conclusion

AI is no longer a side project. Across today’s AI news we see a clear move from narrow helpers to autonomous agents, from small pilots to budget-line items, and from loose norms to real legal and policy fights. These changes touch core systems, customer trust, and long-term competitiveness.

At the same time, the picture is messy. Hardware shortages, sharp swings in startup funding, content lawsuits, and patchy regulation all add friction. Buying the latest tool is not enough. Long-term success depends on clear strategy, tight governance, and teams that can keep learning as the field shifts.

The organizations that thrive will be the ones that can separate real signals from noise and turn headlines into well-scoped projects. They will plan for security at the same time as automation, and they will treat data rights as a first-class concern, not an afterthought.

Our role at VibeAutomateAI is to stay close to the AI news that matters and translate it into practical action. Through deep guides, structured tool reviews, and step-by-step playbooks, we help leaders move from “What happened” to “What should we do next.” The AI race is speeding up, and staying informed is no longer optional. With the right lens on the news, it becomes a steady source of advantage rather than anxiety.

FAQs

Question 1: What Are Frontier AI Agents And How Do They Differ From Chatbots?

Frontier AI agents are systems that can plan and carry out complex, multi-step tasks with limited human input. They do not just respond to prompts. They decide what to do next, call tools, and adjust when they hit errors. Chatbots, by contrast, are mostly reactive and stay inside a single conversation. AWS’s Kiro agent, which can code for days at a time, shows where this is heading. For enterprises, agents can expand workforce capacity far beyond simple question answering.

Question 2: Should My Organization Invest In Custom AI Models Or Use Off-The-Shelf Tools?

The choice depends on your goals and constraints. Custom models make sense when your data is very sensitive, your domain language is highly specific, or small accuracy gains will move key metrics. Off-the-shelf models offer lower upfront cost, faster launch, and simpler operations. Many teams now start with a managed model, then explore tuning once they see real usage. AWS’s new SageMaker features reduce the effort of custom work, but the business case still matters. VibeAutomateAI’s comparison guides can help weigh options for your situation.

Question 3: How Should We Prepare For AI-Orchestrated Cyberattacks?

AI-driven attacks raise the stakes by automating discovery, social engineering, and exploitation. Preparation starts with accepting that manual defenses alone are too slow. Invest in AI-based detection that looks for unusual behavior in accounts, containers, and networks. Give security teams practice in sandboxed ranges such as HTB AI Range, where they can face realistic AI-assisted threats. Add regular red-team exercises that use AI tools to probe your own defenses. Over time, aim for faster, more automated response while keeping humans in charge of key decisions.

Question 4: What Does Meta’s Reversal On News Partnerships Mean For AI Training Data Strategy?

Meta’s new deals with news publishers show that paying for high-quality data is becoming standard practice. This approach reduces legal and reputational risk compared with scraping large amounts of content without permission. Lawsuits against Perplexity by The New York Times and the Chicago Tribune underline how serious this risk has become. Enterprises should respond by building clear data sourcing rules, favoring licensed or internal data, and involving legal counsel in any project that touches third-party content. That keeps innovation and compliance moving together.

Question 5: Are We In An AI Bubble, And How Should This Affect Our Technology Investments?

There are signs of a bubble in parts of the market, with soaring valuations and very aggressive funding rounds. Leaders like Anthropic have noted the high level of risk some players are taking. At the same time, the real business impact of AI on automation, security, and analytics is hard to ignore. The best response is discipline. Focus on projects with clear, measurable value, and apply strict vendor due diligence, especially with young startups. Aim for a balanced mix of established platforms and newer entrants. VibeAutomateAI’s objective analysis can help separate lasting trends from short-term market excitement.
