Introduction

Scroll through any feed of AI news today and it feels like the ground is shifting under our feet. New models, new chips, new rules, and new lawsuits all land at once, while real teams still have tickets to clear and projects to ship. The gap between flashy headlines and what actually matters for daily work can feel wide.

We see the same pattern across the organizations we talk with at VibeAutomateAI. IT leads, security teams, and business owners want to use AI for real gains, not just experiments that never leave a slide deck. At the same time, they worry about cost, compliance, bias, copyright, and vendor risk. It is hard to sort out which AI news today requires action and which items are just noise.

In this article, we walk through the most important developments behind today’s headlines and turn them into clear, practical insight. We look at enterprise integration, the hardware race, regulation and copyright fights, consumer apps, and ethical risks. Along the way, we point out what matters for planning roadmaps, choosing vendors, and shaping internal AI governance. By the end, we want every reader to feel ready to connect AI news today with concrete steps for their own organization.

Key Takeaways

The news cycle can feel fast, so this section gives a quick snapshot before we dig deeper. Each point links to a real decision or risk that teams can act on right now.

- Enterprise AI is moving from pilots into core workflows, which changes how teams plan projects, budget for tools, and design processes around sustained automation instead of one‑off experiments.

- The race for AI hardware is driving new chips, memory, and even quantum research, so infrastructure choices now need to balance cost, energy use, and long‑term vendor dependence instead of looking only at raw power.

- National and regional regulators are pulling in different directions, which means organizations must track both federal rules and local pressure while they design internal AI policies that can survive future changes.

- Publishers are testing the courts while platforms sign data deals, so every team that uses generative tools needs a clear view of training data risks, licensing promises, and how its own content might be reused by others.

- Deepfakes and biased models are no longer edge cases, and rising investment in detection and fairness tools shows that ethics and security are now core parts of any serious AI deployment plan.

- Large funding rounds and high‑profile takeovers show strong investor belief, even while usage growth slows for some chat tools, which is a sign that the market is maturing and shifting toward focused, high‑value use cases.

Andrew Ng has described AI as “the new electricity,” a reminder that the real story is not the headlines themselves, but how widely and quietly AI seeps into everyday work.

AI Enters The Enterprise Core: The End Of The Pilot Era

When we look at AI news today on the enterprise side, one message stands out: pilots are no longer the main story. OpenAI reports that many large customers have moved from sandbox tests to deep, day‑to‑day use inside key workflows. That means AI now touches finance, supply chains, customer support, and product work rather than sitting in a lab.

Cloud providers tell the same story. At re:Invent, AWS said the chatbot phase is fading and pushed the idea of “frontier AI agents.” In plain terms, these are systems that take a goal, plan steps, call multiple tools, and report back when the job is done. Instead of asking a bot for a single answer, teams start to give an agent targets such as closing tickets, tidying data, or drafting code fixes.
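To make the difference from a chatbot concrete, here is a minimal Python sketch of the goal, plan, act, report loop described above. It assumes two hypothetical tools (`close_ticket` and `tidy_record`) and a toy keyword planner standing in for the model call a real agent would make. It does not reflect AWS's or any other vendor's agent framework.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical tools: a real deployment would call ticketing or data systems.
def close_ticket(ticket_id: str) -> str:
    return f"closed {ticket_id}"

def tidy_record(record_id: str) -> str:
    return f"normalized {record_id}"

TOOLS: dict[str, Callable[[str], str]] = {
    "close_ticket": close_ticket,
    "tidy_record": tidy_record,
}

@dataclass
class Step:
    tool: str
    arg: str

@dataclass
class Report:
    goal: str
    results: list[str] = field(default_factory=list)
    errors: list[str] = field(default_factory=list)

def plan(goal: str, items: list[str]) -> list[Step]:
    """Toy planner: a real agent would ask a model to produce these steps."""
    tool = "close_ticket" if "ticket" in goal else "tidy_record"
    return [Step(tool, item) for item in items]

def run_agent(goal: str, items: list[str]) -> Report:
    """Take a goal, plan steps, call tools, and hand back one report."""
    report = Report(goal)
    for step in plan(goal, items):
        tool = TOOLS.get(step.tool)
        if tool is None:  # guard against a planner naming an unregistered tool
            report.errors.append(f"unknown tool {step.tool}")
            continue
        report.results.append(tool(step.arg))
    return report
```

Calling `run_agent("close stale tickets", ["T-1", "T-2"])` returns a report whose `results` are `["closed T-1", "closed T-2"]`. The report object is the point: the agent works through every step and returns one summary instead of answering a single prompt.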

Google is driving a similar push with Gemini Enterprise, which aims to put a capable AI helper on every employee’s desk. If that vision lands, workers will expect quick help with email, research, drafts, and summaries inside the apps they already use. Combined with Anthropic’s Claude Code inside Slack, we now see AI woven into the daily tools of software and business teams, not just added as a separate chat tab.

Sector examples make this shift very real:

- Accounting firms are using agents to pull data, prepare working papers, and flag anomalies so staff can focus on advice instead of manual checks. Other sectors are following: Artificio, for example, has launched industry‑specific AI models for document processing in mortgage, insurance, legal, and HR.

- Manufacturers are feeding models with sensor and supply data to reduce downtime and keep stock levels closer to need.

- The Anthropic and Snowflake deal, worth two hundred million dollars, goes even deeper by placing large models next to sensitive data inside the data cloud itself.

For readers, the key question is not whether AI news today sounds exciting, but whether their own shop is ready to move past pilots. A simple self‑check helps:

- Start by listing current AI experiments and asking whether they tie directly to a clear business metric such as cycle time, error rate, or revenue, since vague goals rarely justify long‑term spend.

- Look at data access and security, and test whether an AI system could safely read the records it needs without breaking internal rules or creating new audit pain for security and compliance teams.

- Review skills and process, and note whether there are clear owners, training plans, and playbooks so that tools do not sit unused or create shadow workflows outside existing controls.
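One way to keep this self‑check honest is to record each pilot against the three questions and list what is missing. The sketch below is a hypothetical structure of our own, not a standard tool; the `Pilot` fields and gap labels are assumptions.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Pilot:
    name: str
    business_metric: Optional[str]  # e.g. "cycle time"; None if the goal is vague
    data_access_approved: bool      # security/compliance signed off on record access
    owner: Optional[str]            # person accountable for training and playbooks

def readiness_gaps(pilot: Pilot) -> list[str]:
    """Return the self-check questions this pilot does not yet satisfy."""
    gaps = []
    if not pilot.business_metric:
        gaps.append("no clear business metric")
    if not pilot.data_access_approved:
        gaps.append("data access not approved")
    if not pilot.owner:
        gaps.append("no named owner")
    return gaps

pilots = [
    Pilot("invoice matching agent", "cycle time", True, "finance ops lead"),
    Pilot("general chat demo", None, False, None),
]
for p in pilots:
    gaps = readiness_gaps(p)
    status = "ready to scale" if not gaps else "stay in the lab: " + ", ".join(gaps)
    print(f"{p.name}: {status}")
```

A pilot with an empty gap list is a candidate to scale; anything else stays in the lab until its gaps are closed.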

As Satya Nadella has argued in several keynotes, AI should be woven into every business process, not treated as a side experiment.

At VibeAutomateAI, we focus on these kinds of concrete checks in our guides, because they show when it is time to scale and when a pilot should stay in the lab.

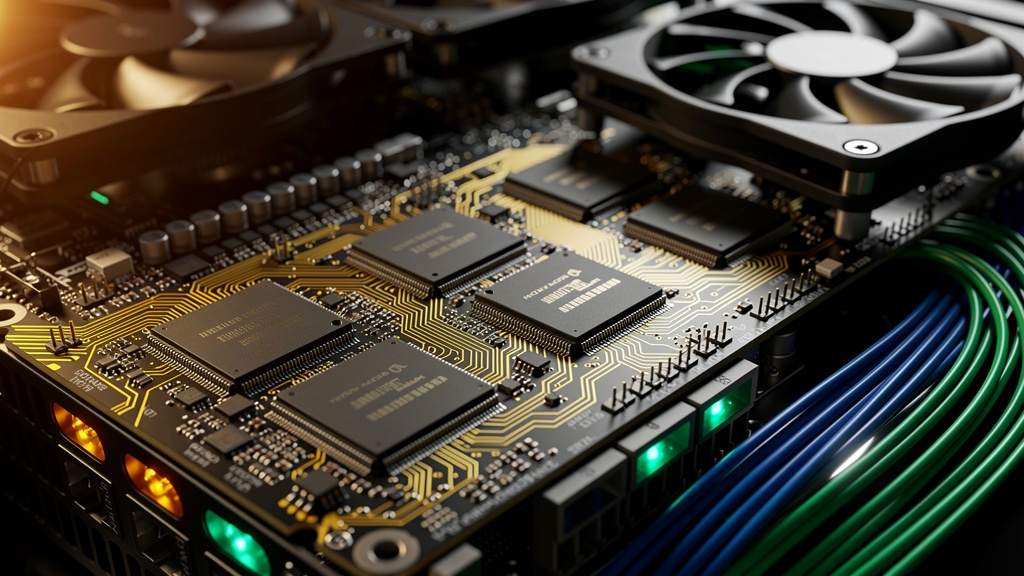

The AI Hardware Arms Race: Power, Efficiency, And Geopolitics

Behind much of the AI news today sits one hard fact: big models need huge compute, and that demand shapes both pricing and policy. The recent decision by the US Commerce Department to allow Nvidia’s H200 chips to ship to China brought this into sharp focus. Export rules are now a lever in both tech policy and foreign policy.

Chip makers see the stakes. Intel’s CEO has talked about keeping Moore’s Law on track, helped by federal support for advanced manufacturing. AWS highlights that its home‑grown chips, built as an option beside Nvidia hardware, already power a multibillion‑dollar business. When cloud vendors design their own silicon, they gain more control over performance, supply, and pricing.

New designs aim to cut energy use as well as boost speed. That matters because, while research shows AI is saving time and money in scientific work, it also raises questions about energy costs. IBM Research announced an analog AI chip that runs deep‑learning workloads with far lower power needs than standard digital chips. At the same time, memory makers feel the strain. Micron has shifted focus toward high‑bandwidth memory for AI data centers, because model training demands far more memory than consumer devices do.

Beyond classical hardware, governments are teaming up on future compute. The UK and Germany plan joint work to bring quantum supercomputing into commercial use. If that succeeds, some problems that now take days on GPU clusters could shrink to minutes, which would reshape what is practical for research and high‑end analysis.

For technology leaders, this hardware race raises practical planning issues:

- When we choose a cloud provider, we now have to look at its custom chips, network, and memory roadmap, since those choices will shape cost and capacity over several years.

- On‑premises builds should factor in power, cooling, and rack space in a more serious way, because high‑end AI gear often strains older facilities and raises both energy bills and environmental concerns.

- We should also keep an eye on contract terms that tie us tightly to one vendor’s hardware path, balancing short‑term gains against future bargaining power and resilience.
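For the power and cooling point, a rough yearly energy estimate multiplies the servers' IT load by a Power Usage Effectiveness (PUE) factor, which accounts for cooling and facility overhead, and then by hours and tariff. This is a back‑of‑envelope sketch; all inputs in the usage line are hypothetical placeholders for figures from your hardware specs and utility contract.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760; ignores leap years

def annual_energy_cost(it_load_kw: float, pue: float, price_per_kwh: float,
                       utilization: float = 1.0) -> float:
    """Estimate yearly electricity cost for an on-premises AI cluster.

    it_load_kw:    average draw of the servers themselves, in kilowatts
    pue:           total facility power / IT power; 1.0 means zero overhead
    price_per_kwh: electricity tariff per kWh
    utilization:   fraction of the year the load actually runs
    """
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    facility_kw = it_load_kw * pue  # servers plus cooling and other overhead
    return facility_kw * HOURS_PER_YEAR * utilization * price_per_kwh
```

With a hypothetical 40 kW of AI servers running around the clock, a PUE of 1.5, and $0.12 per kWh, `annual_energy_cost(40, 1.5, 0.12)` comes to roughly $63,000 a year in electricity alone, before any hardware or staffing costs.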

VibeAutomateAI tracks these shifts so that our readers can connect hardware news with real infrastructure and vendor plans instead of guessing.

Federal Versus State Control: The AI Regulation Showdown

Not all AI news today is about chips and models. Policy fights are heating up as well. In the United States, President Donald Trump has said he plans an executive order that would block state‑level AI laws and set one federal rule instead. Supporters claim that a single standard would make compliance easier for companies that work across many states.

Lawmakers on both sides of the aisle push back. Some argue that states should keep room to act on local concerns such as biometric use, education, or hiring rules. Others worry that a single national rule might lean too far toward industry wishes and move too slowly as new risks appear. The result is a tug of war between consistency and local control.

A patchwork of state laws could force multi‑state firms to juggle different data rules, audit needs, and reporting standards. On the other hand, a heavy central rule could freeze certain uses across the country or crowd out thoughtful local experiments.

Outside the US, similar tensions play out in other ways. The European Union is investigating Meta over a WhatsApp policy that blocks rival chatbots, framing it as a possible competition issue. Deals such as the UK and Canada agreement on AI compute and the US and Japan partnership on AI and research show that governments also see AI as a shared strategic area.

For organizations, this means AI governance cannot wait for perfect clarity:

- Begin by mapping where your AI tools touch personal data, high‑risk decisions, or safety‑critical systems, since those areas tend to attract faster and stricter rules.

- Align internal policies with existing privacy and security standards, which gives a solid baseline that will likely match many future AI‑specific rules.

- Assign clear owners across legal, security, and IT who track AI policy news and adjust controls and documentation as laws shift.
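The mapping step can start as a simple inventory. This sketch assumes a hypothetical `AIAsset` record with three yes/no flags matching the risk areas above, and orders tools so the ones touching the most sensitive areas are reviewed first. It is an illustration, not a compliance framework.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class AIAsset:
    name: str
    owner: str
    touches_personal_data: bool
    makes_high_risk_decisions: bool  # e.g. hiring, credit, access control
    safety_critical: bool

def risk_score(asset: AIAsset) -> int:
    """Count how many sensitive areas an asset touches (0 to 3)."""
    return sum([asset.touches_personal_data,
                asset.makes_high_risk_decisions,
                asset.safety_critical])

def priority_review(register: list[AIAsset]) -> list[str]:
    """Names of flagged assets, highest score first (ties keep input order)."""
    flagged = [a for a in register if risk_score(a) > 0]
    flagged.sort(key=risk_score, reverse=True)  # sort is stable even with reverse
    return [a.name for a in flagged]

register = [
    AIAsset("marketing copy drafts", "marketing", False, False, False),
    AIAsset("resume screener", "hr", True, True, False),
    AIAsset("support summarizer", "support", True, False, False),
]
print(priority_review(register))
```

Here the resume screener is reviewed first and the marketing drafting tool drops out of the priority list, which mirrors where stricter rules are most likely to land.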

Legal scholar Danielle Citron often notes that technology policy is really about deciding “who bears the risk when things go wrong” — a question every AI program has to answer explicitly.

This is the type of practical, cross‑functional approach we explain often at VibeAutomateAI, because it keeps teams ahead of compliance shocks.

The Copyright Battlefield: Publishers Fight Back Against AI Training

One of the sharpest conflicts in AI news today sits at the crossroads of models and media. The New York Times and the Chicago Tribune have both sued Perplexity AI, saying that its systems used their articles without permission and then produced outputs that compete with their work. In simple terms, they accuse the company of copying value without paying for it.

These cases ask a hard legal question: when a model ingests public web pages, is that fair use or copyright infringement? Courts now have to sort out how old rules about copying, quotation, and reference apply when a machine absorbs billions of tokens and then writes new text that may echo or summarize the source material. Separate research adds to the concern: what has been described as the largest study of its kind found that AI's distortion of news is systemic and consistent across languages and territories, raising questions about how models process and reproduce content.

Not every player is choosing the legal path. Meta has signed paid data deals with several publishers in order to feed real‑time news into its Meta AI assistant. That route offers a more cooperative model in which rights holders receive money and recognition in exchange for controlled use of their content. A separate policy report on AI and copyright from the Tony Blair Institute set off strong criticism, showing how split experts are over what a fair balance looks like.

For businesses, these fights matter on two levels. First, there is risk in using tools whose training data or retrieval methods may be challenged, especially for high‑profile or public content. Second, every company that produces articles, images, or video must decide whether and how it will allow others to train on that material.

Content and marketing teams can respond in several practical ways:

- Ask vendors direct questions about training data sources, opt‑out policies, and any licensing deals, then record those answers for legal and compliance teams to review.

- Set clear rules for staff who use generative tools, including human review, fresh fact checks, and limits on where AI‑drafted text can appear without editing.

- Review terms of service on hosting and social platforms to see whether company content can be reused for training, and update publishing strategies if that exposure feels too high.

Lawrence Lessig has long reminded creators that “fair use is a right,” a phrase now being tested again as courts weigh how models consume and reproduce content.

At VibeAutomateAI, we pay close attention to this side of AI news today, because it shapes which tools are safe for enterprise use and how creators can protect their work.

Generative AI Goes Mainstream: From Groceries To Dating

Not all progress sits inside data centers or boardrooms. A big slice of AI news today shows up in daily habits, as more consumer apps add generative features on top of familiar tasks.

Some prominent examples include:

- Instacart + ChatGPT: Inside a chat, a person can plan a meal, get a recipe, and send the full grocery list for delivery in one flow. That is more than a chat add‑on; it shows purchase actions moving inside conversations.

- Hinge conversation prompts: Hinge uses AI to craft fresh “Convo Starters” so people can move past dry openers and get into real chats faster.

- Google Doppl for shopping: Google’s Doppl app brings AI to online shopping with a virtual try‑on and a shoppable feed, letting people see how items may look before they buy.

- Aluminium OS: An effort called Aluminium OS aims to move beyond ChromeOS by weaving AI into the heart of the system, so that the device can act more like a helper than a passive tool.

- ByteDance smartphones: ByteDance is exploring smartphones that can run agent‑like features which manage apps, suggest actions, and take care of small tasks with little input.

- Apple’s top‑app lists: Apple’s yearly list of top apps now features many titles with strong AI features, showing that regular users have started to expect these abilities in the tools they pick.

For enterprises, this consumer trend matters. Employees arrive at work already used to smart suggestions and quick automation, and they bring those expectations to company systems. When we read AI news today about consumer apps, it is really a preview of design patterns that will soon press into business software as well.

Confronting AI’s Dark Side: Deepfakes And Algorithmic Bias

The same advances that drive positive AI news today also power real risks. Deepfakes are one clear example. With current tools, a small group can create audio or video that looks and sounds like a real person, then spread it at scale. That puts individuals, brands, and even elections at risk.

Major firms have started to invest in defense. Google, the Sony Innovation Fund, and Okta have backed Resemble AI, a company that works on deepfake detection. Money flowing into this area shows that leaders now treat synthetic media as a security and trust problem, not just a curious trick. Detection alone is not enough, but it is an important layer in a broader response.

Bias is a second major concern. When training data reflects past unfairness in finance, hiring, or justice, models can repeat those patterns. A recent contest award for a project that reduces bias in digital payments shows how much attention this topic now receives. The stakes are high, because biased systems can deny loans, jobs, or services more quietly and at greater scale than old manual processes. Related research also shows that AI still struggles to separate fact from belief in ways that reflect true human understanding.

Some vendors are starting to open the curtain on their safety work. Google has shared details about security protections for new agent features in Chrome, which is a step toward the kind of transparency regulators and users ask for. Still, the main duty sits with each organization that deploys AI inside its own stack.

Security and compliance teams can take several clear steps:

- Build an AI asset register that lists every model or feature in use, what data it touches, and which business decisions it shapes, so that audits and reviews cover the full picture.

- Establish bias tests on key outputs, especially for finance, hiring, and access control, then repeat those tests on a regular schedule and after every major model change.

- Put deepfake risks into incident response plans, including how to verify suspect media, how to brief staff, and how to respond if attackers use fake content against the company.
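For the bias tests, one common starting point is to compare approval (selection) rates across groups and flag any group whose rate falls below four‑fifths of the highest group's rate, a rule of thumb from US employment guidance. The sketch below assumes decisions are available as `(group, approved)` pairs. Passing this check does not prove a model is fair; teams should pair it with other metrics and expert review.

```python
from collections import defaultdict

def selection_rates(outcomes: list[tuple[str, bool]]) -> dict[str, float]:
    """Approval rate per group from (group label, approved?) pairs."""
    totals: dict[str, int] = defaultdict(int)
    approved: dict[str, int] = defaultdict(int)
    for group, ok in outcomes:
        totals[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / totals[g] for g in totals}

def disparate_impact_flags(outcomes: list[tuple[str, bool]],
                           threshold: float = 0.8) -> dict[str, float]:
    """Groups whose rate is below `threshold` times the best group's rate,
    mapped to that ratio (the 'four-fifths' rule of thumb)."""
    rates = selection_rates(outcomes)
    best = max(rates.values())
    if best == 0:
        return {}  # nobody approved; the ratio is undefined
    return {g: r / best for g, r in rates.items() if r / best < threshold}

decisions = ([("A", True)] * 8 + [("A", False)] * 2
             + [("B", True)] * 5 + [("B", False)] * 5)
print(selection_rates(decisions))
print(disparate_impact_flags(decisions))
```

With group A approved 8 of 10 times and group B 5 of 10 times, B's ratio is 0.5 / 0.8 = 0.625, so it is flagged. Rerunning the same check after every model change gives the regular schedule the list above calls for.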

As the old Spider‑Man line puts it, “With great power comes great responsibility” — a good summary of the balance between AI capability and safety.

At VibeAutomateAI, we frame ethics, bias, and security as normal parts of AI design, not add‑ons, and we bring that lens to how we read AI news today.

Market Dynamics: Billion‑Dollar Valuations And Strategic Acquisitions

Funding headlines in AI news today can look wild, but they also hint at where value is moving. Aaru, a startup focused on synthetic research, reached a one‑billion‑dollar valuation in its Series A round. Yoodli, which offers AI‑based communication coaching, tripled its value past three hundred million dollars. These numbers tell us that investors believe in focused tools that solve clear, narrow problems.

On the services side, Micro1 has passed one hundred million dollars in annual recurring revenue by offering data and engineering capacity for AI work, putting it in the ring with firms like Scale AI. That is a sign that many companies prefer to buy expert help rather than build every data and labeling pipeline on their own.

Big tech players keep reshaping the field through takeovers and hires. Meta bought Limitless, a firm working on AI devices, showing clear interest in hardware as well as software. Meta also hired Alan Dye from Apple to lead a creative studio inside Reality Labs, which highlights the intense competition for top design talent in AI and virtual reality.

At the same time, not every number climbs. Reports show that ChatGPT user growth has slowed from its early spike. Leaders such as the CEO of Anthropic talk openly about the risk of an “AI bubble” and point to rivals taking aggressive bets. For practitioners, this mix of high funding and early signs of cooling leads to a simple test: when we read AI news today about money, we should ask whether a product ties to a real, repeatable business use before we commit our own budgets.

Conclusion

Across all the AI news today we have walked through, one pattern stands out. AI is no longer a side project or a far‑off promise. It sits in core business workflows, chips, phones, browsers, and policy debates. Experiments are giving way to long‑term systems that shape how teams work and how data flows.

This shift brings fresh pressure. Hardware choices tie into geopolitics and cost. Regulations pull between local and national control. Courts and contracts reshape how content feeds models. Deepfakes and bias push security and ethics into every design choice. The gains are real, but so are the new duties placed on teams that run and govern AI.

At VibeAutomateAI, our goal is to connect AI news today with practical steps that readers can use right away. We focus on guides, checklists, and clear explanations that link headline events to project plans, governance frameworks, and day‑to‑day workflows. If this kind of analysis helps, we invite readers to follow our updates and use our tutorials as a reference. With the right information, professionals can do more than react to the news. They can shape how AI is used inside their own organizations with confidence and care.

FAQs

Question 1: What Does “Agentic AI” Actually Mean And How Is It Different From Chatbots?

When we talk about agentic AI, we mean systems that can accept a goal, break it into steps, call the right tools, and report back after tasks are done. A standard chatbot mostly answers direct questions, one exchange at a time, inside a single chat window. An agent might read a ticket queue, update records, send emails, and log results without more human prompts. In short, it behaves more like a junior assistant than a simple answer box.

Question 2: Should My Organization Wait For Federal AI Regulations Before Implementing AI Systems?

We do not suggest waiting, because that puts teams at risk of falling behind while rules are still under debate. Instead, we recommend building strong internal AI policies that match current privacy, security, and data protection laws. Those policies should stress clear consent, limited data use, and strong logging and oversight. With that base in place, it is easier to adjust when federal rules land, no matter how the final details look.

Question 3: How Can We Make Sure Our AI Systems Don’t Perpetuate Bias?

We start by pushing for training data that reflects the full range of people and cases we serve, not just easy or historic samples. From there, we test outputs often, looking for patterns that treat groups unfairly, especially in areas such as loans, hiring, or discipline. High‑stakes uses should always include human review so that no one faces a major decision from a model alone. Cross‑team ethics groups can review findings and guide fixes so that bias issues do not sit only with engineers.

Question 4: What Are The Copyright Risks Of Using Generative AI Tools In Our Business?

Right now, courts are still sorting out major copyright cases, so we need to treat this area with care. We advise checking what each vendor says about training data sources and licensing deals, and favoring tools that have clear, written terms. Human review of AI output is vital for public or customer‑facing work, both for accuracy and for legal comfort. We also suggest following legal developments in AI so that contracts and internal rules can be updated as new court decisions arrive.

Question 5: Is Investing In Custom AI Hardware Worth It For Mid‑Sized Enterprises?

For most mid‑sized firms, AI platforms such as VibeAutomateAI, together with cloud‑based AI services from providers like AWS, Google, or Microsoft, give better value and flexibility than buying custom hardware. High capital spend on chips and data centers only makes sense when workloads are huge, steady, and very specific. We recommend a clear cost and risk review that compares cloud, hybrid, and on‑premises options over several years. In many cases, the smarter move is to focus on picking strong use cases and good partners rather than owning the metal.
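A multi‑year comparison does not need to be elaborate to be useful. The sketch below totals cloud, on‑premises, and hybrid costs over the same horizon. Every input is a hypothetical placeholder to replace with real quotes, and it leaves out depreciation, hardware refresh, utilization, and vendor risk.

```python
def cloud_cost(monthly_spend: float, years: int, annual_growth: float = 0.0) -> float:
    """Pay-as-you-go spend over `years`, growing by `annual_growth` each year."""
    total, yearly = 0.0, monthly_spend * 12
    for _ in range(years):
        total += yearly
        yearly *= 1 + annual_growth
    return total

def on_prem_cost(capex: float, yearly_power: float, yearly_staff: float, years: int) -> float:
    """Up-front hardware plus recurring power/cooling and staffing costs."""
    return capex + years * (yearly_power + yearly_staff)

def hybrid_cost(on_prem_total: float, burst_monthly: float, years: int) -> float:
    """Smaller on-premises base plus cloud capacity for peak demand."""
    return on_prem_total + cloud_cost(burst_monthly, years)

if __name__ == "__main__":
    years = 3
    options = {
        "cloud": cloud_cost(20_000, years),
        "on_prem": on_prem_cost(500_000, 60_000, 120_000, years),
        "hybrid": hybrid_cost(on_prem_cost(200_000, 25_000, 60_000, years), 8_000, years),
    }
    for name, cost in sorted(options.items(), key=lambda kv: kv[1]):
        print(f"{name:8s} {cost:>12,.0f}")
```

With these made‑up inputs, cloud comes out lowest at 720,000 over three years, hybrid at 743,000, and on‑premises at 1,040,000. Different inputs, such as steady heavy workloads, can easily reverse that order, which is why the review should use real figures.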
