Introduction to AI in Robotics

The numbers around AI in robotics already read like science fiction. In 2023, the AI robotics market stood at 15.2 billion dollars. Projections push that figure to 111.9 billion dollars by 2033, a compound annual growth rate of just over 22 percent. Meanwhile, more than 3.5 million industrial robots are already at work around the planet.
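The annual growth figure follows directly from the two market values. A compound annual growth rate (CAGR) over n years is (end / start)^(1/n) - 1. The short Python check below uses only the 2023 and 2033 figures quoted in this guide. The function name is our own, and the result is only as good as the projection it starts from.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by a start value and an end value."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Market figures quoted in this guide, in billions of dollars (2023 -> 2033).
rate = cagr(15.2, 111.9, 10)
print(f"Implied annual growth: {rate:.1%}")  # prints: Implied annual growth: 22.1%
```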

Most of those machines began as simple automation, but recent advances in AI, robotics, and automation are pushing their capabilities far beyond repetitive tasks. A robotic arm that repeats the same weld all day is useful, but it does not think. Robotics gives us the physical body. Artificial intelligence supplies the digital brain. When we combine them, the robot stops acting like a scripted tool and starts behaving more like a partner that can sense, decide, and adapt on its own.

“Robotics and AI are at their best when people stay at the center of the system.” — VibeAutomateAI

That shift is what interests us at VibeAutomateAI. We focus on turning complex tech topics into clear, practical guidance that real teams can apply. In this guide, we walk through how AI in robotics actually works, which core technologies sit under the hood, how nine major industries already depend on it, what roles robotics engineers play, and where this field may go next with AGI. By the end, readers gain both a big-picture view and a grounded sense of how to put these ideas to work.

Key Takeaways on AI in Robotics

This guide covers a lot of ground, so it helps to see the highlights up front. These points show what readers gain by spending time with this expert overview of AI-powered robotics:

- Readers see a clear split between robotics as the physical machine and artificial intelligence as the decision-making brain. This clarity makes it easier to spot where AI in robotics adds new value, instead of thinking of every robot as the same kind of system. With this mental model, teams can plan smarter automation projects.

- We walk through seven core technologies that power modern AI in robotics, including machine learning, computer vision, natural language processing, edge computing, cobots, predictive maintenance, and digital twins. Each one comes with simple examples that link directly to real factory floors, warehouses, and service settings. Readers finish with a mental toolkit instead of a vague buzzword list.

- The article shows how AI in robotics already drives change across agriculture, aerospace, automotive, healthcare, food service, manufacturing, logistics, the military, and even home products. Concrete examples tie this progress to business impact such as less downtime, safer work, and higher output. This helps leaders connect technical choices to real returns.

- We look at the work of robotics engineers who design, build, and improve these systems over time. Their mix of programming skill, system thinking, and safety oversight shows what kind of talent modern AI in robotics needs, and what career paths are opening.

- We close with the longer view where embodied AI and constant sensory feedback may push the field toward AGI. Rather than chasing science fiction, we show how projects such as MIT’s speech-to-reality research line up with this path, so readers can track what is marketing spin and what is real progress.

Understanding the Fundamentals – What Sets AI in Robotics Apart

To make sense of AI in robotics, it helps to look at each half on its own first.

Robotics deals with machines that can move in the physical world. Engineers design, build, and program arms, wheels, grippers, and sensors so that a robot can perform a job on its own or with limited help from a human. A classic example is a robotic arm on an assembly line that fits a door to each car with the same motion, over and over, through every shift.

Artificial intelligence, on the other hand, is about software that can mimic parts of human thought. AI systems study data, notice patterns, learn from past results, and make choices. This shows up in many places that have nothing to do with mechanical robots, such as voice assistants, fraud detection, or route planning in navigation apps.

When we bring these fields together, AI-driven robots stop being fixed scripts and become flexible agents. Sensors send streams of data about position, sound, temperature, and images into AI models that can react in real time. The robot can dodge an obstacle, adjust its grip on a fragile object, or change its route through a warehouse without waiting for a human programmer. For industries that depend on precision, safety, and fast decisions, that combination is now vital. At VibeAutomateAI, we focus on showing how to move from theory to working systems that join physical hardware with practical AI logic.

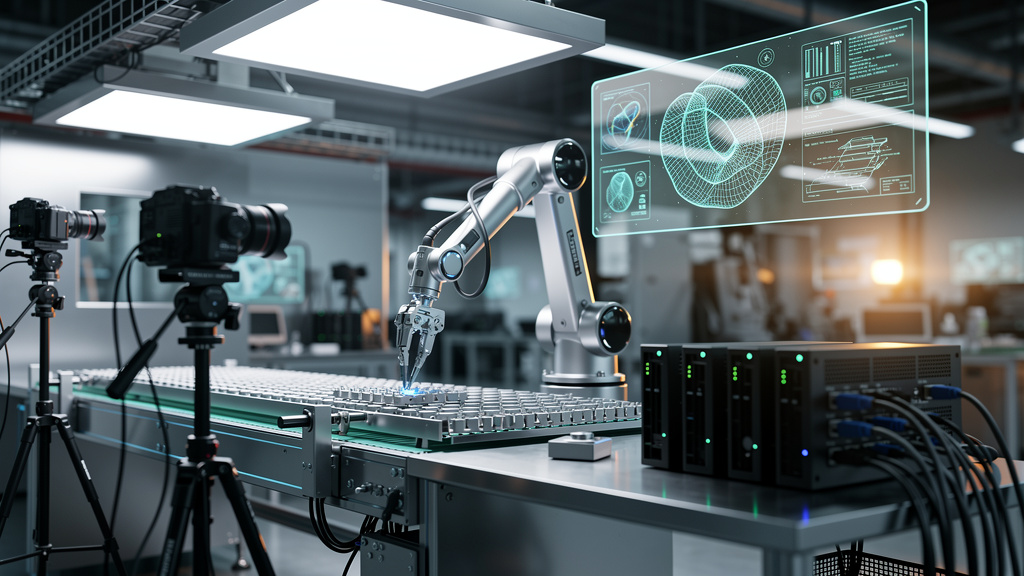

The Seven Core AI in Robotics Technologies Powering Modern Systems

When we talk about AI in robotics, we are really talking about a set of building blocks that work together. This group of technologies turns simple machines into systems that can sense, decide, and improve. They also help explain why the AI robotics market is projected to rise from 15.2 to 111.9 billion dollars in a single decade.

Machine Learning (ML)

Machine learning sits at the center of most modern AI in robotics. Instead of asking engineers to script every case, ML models learn from data and experience.

Typical uses include:

- A robot on an assembly line studying thousands of past cycles and then adjusting its movements for better speed and accuracy.

- Mobile robots in a warehouse comparing many trips and gradually picking faster, safer paths between shelves.

The key advantage is that performance improves over time without constant manual reprogramming.
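To make "learning from experience" concrete, here is a minimal sketch of the warehouse example. The robot keeps a running average of trip times for each route, mostly picks the fastest one, and occasionally explores the others. This is an epsilon-greedy bandit, one simple learning technique chosen for illustration. The `RouteLearner` class, the route names, and the simulated trip times are hypothetical and not taken from any particular robot platform.

```python
import random


class RouteLearner:
    """Epsilon-greedy route selection learned from observed trip times (hypothetical)."""

    def __init__(self, routes, epsilon=0.1, seed=0):
        self.routes = list(routes)
        self.epsilon = epsilon                       # share of trips spent exploring
        self.rng = random.Random(seed)
        self.counts = {r: 0 for r in self.routes}
        self.mean_time = {r: 0.0 for r in self.routes}

    def choose(self):
        # Try every route once, then mostly exploit the fastest known route.
        untried = [r for r in self.routes if self.counts[r] == 0]
        if untried:
            return untried[0]
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.routes)
        return self.best()

    def update(self, route, trip_seconds):
        # Incremental mean, so no trip history needs to be stored.
        self.counts[route] += 1
        self.mean_time[route] += (trip_seconds - self.mean_time[route]) / self.counts[route]

    def best(self):
        return min(self.routes, key=lambda r: self.mean_time[r])


def simulate(trips=500, seed=1):
    # Hypothetical "true" average trip times in seconds; the learner only sees noisy samples.
    true_times = {"aisle_A": 60.0, "aisle_B": 45.0, "aisle_C": 70.0}
    world = random.Random(seed)
    learner = RouteLearner(true_times)
    for _ in range(trips):
        route = learner.choose()
        learner.update(route, world.gauss(true_times[route], 3.0))
    return learner


if __name__ == "__main__":
    learner = simulate()
    print("Preferred route:", learner.best(), learner.counts)
```

The small exploration rate keeps the robot from locking onto a route that only looked fast on its first trip.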

Computer Vision

Computer vision gives robots the ability to see the world. Cameras and depth sensors feed images into AI models that detect shapes, colors, and defects.

For example:

- On a factory line, vision systems let robots check each product for flaws far faster than a person could manage.

- In the field, drones fly over bridges, solar farms, or crops and spot cracks, damage, or pests from the air.

For self-driving cars and delivery robots, computer vision is central to staying in the lane, spotting people, and handling busy streets.
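Production vision systems typically rely on trained models. The core inspection decision, though, can be sketched with a much simpler classical technique: compare each captured grayscale image against a known-good reference (a "golden template") and reject the part when too many pixels differ. The list-of-lists image format and both thresholds below are illustrative assumptions, not settings from any real inspection product.

```python
from typing import List

Image = List[List[int]]  # grayscale pixels, 0-255


def defect_fraction(image: Image, reference: Image, tolerance: int = 30) -> float:
    """Share of pixels that differ from the reference by more than `tolerance`."""
    if len(image) != len(reference) or any(len(a) != len(b) for a, b in zip(image, reference)):
        raise ValueError("image and reference must have the same shape")
    total = sum(len(row) for row in image)
    bad = sum(
        1
        for row, ref_row in zip(image, reference)
        for px, ref_px in zip(row, ref_row)
        if abs(px - ref_px) > tolerance
    )
    return bad / total


def is_defective(image: Image, reference: Image, tolerance: int = 30,
                 max_fraction: float = 0.01) -> bool:
    # Reject the part when more than `max_fraction` of its pixels look wrong.
    return defect_fraction(image, reference, tolerance) > max_fraction


# Usage: a uniform 10x10 reference and a copy with a dark 3x3 "scratch".
reference = [[128] * 10 for _ in range(10)]
scratched = [row[:] for row in reference]
for y in range(3, 6):
    for x in range(3, 6):
        scratched[y][x] = 0
print(defect_fraction(scratched, reference))  # 0.09
print(is_defective(scratched, reference))     # True
```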

Natural Language Processing (NLP)

Natural language processing allows AI in robotics to work with human speech and text. Instead of pressing buttons or writing code, people can talk to a robot in everyday language.

You see this when:

- In a store or bank, service robots use NLP to understand questions, fetch account details, and give clear answers.

- In hospitals, humanoid helpers respond to patient requests and pass clear summaries to nurses.

- On factory floors, simple voice commands guide many robots, trimming training time and making control more flexible.
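A full NLP stack combines speech recognition with trained language models. The final step, turning a transcribed utterance into a structured command that a controller can execute, can be sketched with simple pattern matching. The phrasing rules, the `Command` structure, and the robot and station numbering here are hypothetical. A real system would use a trained intent model rather than regular expressions.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class Command:
    action: str                           # "move" or "stop"
    robot_id: int
    target_station: Optional[int] = None


# Hypothetical phrasing rules for a small cell of numbered robots and stations.
_STOP = re.compile(r"\b(?:stop|halt|pause)\s+robot\s+(\d+)\b")
_MOVE = re.compile(r"\b(?:move|send)\s+robot\s+(\d+)\s+to\s+station\s+(\d+)\b")


def parse_command(utterance: str) -> Optional[Command]:
    """Map a transcribed utterance to a structured command, or None if unrecognized."""
    text = utterance.lower()
    # Check stop phrases first so a safety command is never misread as a move.
    match = _STOP.search(text)
    if match:
        return Command("stop", int(match.group(1)))
    match = _MOVE.search(text)
    if match:
        return Command("move", int(match.group(1)), int(match.group(2)))
    return None


print(parse_command("Please send robot 3 to station 7"))
print(parse_command("Stop robot 12 now"))
```

Returning `None` for anything unrecognized, rather than guessing, lets the robot ask the operator to repeat the request.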

Edge Computing

Edge computing changes where AI in robotics does its thinking. Rather than sending every sensor reading to a distant cloud server, the robot processes most data on its own chips or on a close gateway.

Local processing:

- Cuts delay, which matters when a robot arm must stop the instant a person steps too close.

- Keeps mission-critical robots working even when network links drop.

For fast-moving manufacturing lines, mobile cobots, and remote inspection robots, this ability to think at the edge is now common practice.
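The latency argument comes down to simple arithmetic. During the delay between sensing and acting, the robot keeps moving. The sketch below compares a local (edge) decision with a round trip to a remote server. The speed, the latency figures, and the safety margin are illustrative assumptions, not values from any safety standard.

```python
def reaction_distance_m(speed_m_s: float, latency_ms: float) -> float:
    """Distance travelled before a command issued now takes effect."""
    return speed_m_s * latency_ms / 1000.0


def safety_decision(person_distance_m: float, speed_m_s: float, latency_ms: float,
                    stop_margin_m: float = 0.5) -> str:
    # Stop if, once the latency has elapsed, the robot would be inside the margin.
    remaining = person_distance_m - reaction_distance_m(speed_m_s, latency_ms)
    return "stop" if remaining <= stop_margin_m else "continue"


# Same scene, two architectures: a person 0.75 m away, robot moving at 1.5 m/s.
for label, latency in [("edge, ~10 ms", 10), ("cloud round trip, ~200 ms", 200)]:
    print(label, reaction_distance_m(1.5, latency), safety_decision(0.75, 1.5, latency))
```

With a 200 ms delay, the robot covers 0.3 m before any command lands, so it has to stop much earlier. Over the local path it covers about 1.5 cm and can keep working safely at the same distance.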

Collaborative Robotics (Cobots)

Collaborative robots, or cobots, are built to share space with people. AI in robotics helps these machines notice human presence, estimate how nearby staff might move, and slow or stop to avoid contact.

Cobots often:

- Take on tasks that are heavy, boring, or demand steady force, such as lifting parts, sanding, or placing components.

- Let people nearby handle setup, fine adjustments, and checks that still need human judgment.

This mix keeps workers safer while raising total output.
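One common way for a cobot to "slow or stop to avoid contact" is to scale its speed based on how close the nearest person is. The sketch uses two hypothetical thresholds, a stop distance and a slow-down distance, with a linear ramp between them. Real cells derive these values from a risk assessment and the applicable standards (such as ISO/TS 15066 for collaborative operation). This toy function does not implement any standard.

```python
def cobot_speed_scale(person_distance_m: float, stop_dist_m: float = 0.5,
                      slow_dist_m: float = 1.5) -> float:
    """Fraction of full speed allowed given the nearest person's distance (hypothetical zones)."""
    if stop_dist_m >= slow_dist_m:
        raise ValueError("stop distance must be smaller than slow-down distance")
    if person_distance_m <= stop_dist_m:
        return 0.0          # protective stop
    if person_distance_m >= slow_dist_m:
        return 1.0          # nobody nearby: full programmed speed
    # Linear ramp between the stop zone and the slow-down zone.
    return (person_distance_m - stop_dist_m) / (slow_dist_m - stop_dist_m)


for d in (2.0, 1.0, 0.4):
    print(f"person at {d} m -> {cobot_speed_scale(d):.0%} speed")
```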

Predictive Maintenance

Predictive maintenance uses AI in robotics to keep systems working longer and with fewer surprises. Sensors on motors, joints, and tools feed data on vibration, heat, and current draw into analytics models. Those models spot early signs of wear long before a part fails.

That allows maintenance teams to:

- Plan short service windows instead of facing sudden breakdowns that stop a line for hours.

- Cut spare part waste and keep uptime high across factories, warehouses, and utilities.
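A minimal way to spot "early signs of wear" is to learn a baseline from healthy readings and flag later readings that drift far outside it. The sketch below applies a simple mean-and-standard-deviation (z-score) rule to vibration readings. Production systems use richer models. The baseline length, the threshold `k`, and the sample data are assumptions made for illustration.

```python
from statistics import mean, stdev
from typing import List, Sequence


def wear_alerts(readings: Sequence[float], baseline_n: int = 20, k: float = 3.0) -> List[int]:
    """Indices of readings more than k standard deviations from the healthy baseline."""
    if baseline_n < 2 or len(readings) <= baseline_n:
        raise ValueError("need at least 2 baseline readings plus some new ones")
    baseline = readings[:baseline_n]          # assumed to come from a healthy machine
    mu, sigma = mean(baseline), stdev(baseline)
    return [
        i
        for i in range(baseline_n, len(readings))
        if abs(readings[i] - mu) > k * sigma
    ]


# Hypothetical vibration RMS values (mm/s): 20 healthy samples, then a developing fault.
healthy = [1.0, 1.2] * 10
recent = [1.1, 1.15, 1.6, 2.0]
print(wear_alerts(healthy + recent))  # [22, 23]
```

In practice, teams often require several consecutive alerts before opening a work order, so that a single noisy reading does not trigger a service window.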

Digital Twin Technology

Digital twins create virtual copies of real robots, lines, or even whole plants. In robotics, teams build a detailed software model of the environment and then let the robot practice in that digital space.

This digital model helps teams:

- Let the robot try thousands of moves, fail safely, and refine its behavior before it ever touches real equipment.

- Shorten setup time and make it easier to test edge cases such as rare errors or emergency stops.

For complex or hazardous settings, digital twins are becoming a standard step before live deployment.
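Practicing "in that digital space" can be sketched as running many cheap simulated trials for each candidate setting and keeping the setting that succeeds most often, all before any real hardware moves. In the toy twin below, a gripper pick succeeds when the applied force plus random noise lands between a slip limit and a crush limit. The physics, the limits, and the candidate forces are hypothetical stand-ins for a real simulator.

```python
import random


def simulate_pick(grip_force_n: float, rng: random.Random, slip_below: float = 8.0,
                  crush_above: float = 15.0, noise_sd: float = 1.0) -> bool:
    """Toy twin: a pick succeeds if the noisy effective force avoids slipping and crushing."""
    effective = grip_force_n + rng.gauss(0.0, noise_sd)
    return slip_below <= effective <= crush_above


def tune_in_twin(candidates, trials: int = 1000, seed: int = 42):
    """Run many simulated picks per candidate force and return the most reliable one."""
    rng = random.Random(seed)
    rates = {}
    for force in candidates:
        successes = sum(simulate_pick(force, rng) for _ in range(trials))
        rates[force] = successes / trials
    best = max(rates, key=rates.get)
    return best, rates


best, rates = tune_in_twin([6.0, 9.0, 11.5, 14.0, 17.0])
print("best simulated grip force:", best, "N")
print(rates)
```

Failed picks cost nothing in the twin, which is exactly why thousands of them are affordable there and not on the real line.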

Nine Industries Changed by AI in Robotics

AI in robotics has moved far past the lab, and research is beginning to measure the impact of AI-powered robotics on workforce dynamics and productivity across multiple sectors. It already shapes work in fields as different as farming, surgery, air travel, and home cleaning. The examples below show how wide this shift already is and why many leaders treat intelligent robots as a core part of their future plans.

Agriculture For Precision And Sustainability

In farming, AI in robotics helps growers raise yields while using fewer inputs. Precision weeding robots such as those from Ecorobotix scan fields with computer vision and spray only the weeds, which sharply cuts herbicide use. Platforms such as Burro follow pickers through orchards and carry full trays, easing physical strain and closing labor gaps. Together, these tools support more sustainable and profitable farms.

Aerospace For Precision Engineering And Safety

Aerospace work often happens in places that are hard or unsafe for people. AI in robotics allows NASA and others to use robotic arms during spacecraft assembly and service where conditions are harsh. Inspection drones with AI models fly around aircraft, scanning wings and fuselages for cracks or signs of fatigue. This mix improves safety records and reduces the time jets sit on the ground for manual checks.

Automotive With Smart Manufacturing And Autonomous Vehicles

The car industry has long used robots, and AI in robotics takes that to a new level. In gigafactories, companies such as Tesla rely on AI-guided arms and movers for welding, painting, and assembly with fine tolerances and high speed. On the road, AI handles perception, planning, and control for self-driving systems, reading the scene and making split-second choices. Newer model architectures such as vision transformers help vehicles interpret their surroundings in richer detail and support safer autonomy.

Food Service For Automation And Consistency

Restaurants face tight margins and staff shortages, which makes AI in robotics very attractive. Miso Robotics built Flippy, a robot that handles hot, repetitive grill and fryer tasks with steady quality. In some dining rooms, tracked or wheeled robots move dishes from kitchen to table in a smooth flow. These systems keep food quality steady, cut kitchen stress, and free staff for personal service that guests remember.

Healthcare With Precision Medicine And Patient Support

Healthcare shows some of the most advanced AI-assisted robotics at work. The da Vinci Surgical System lets surgeons control robotic instruments with fine, stable motion that supports smaller incisions and shorter recovery for patients. Robotic exoskeletons help people who are re-learning to walk by guiding limbs and giving instant feedback on progress. In both cases, software reads sensor data and adjusts on the fly, from motion scaling and tremor filtering in surgical systems to real-time gait support in exoskeletons. That can lead to better clinical results and less strain on staff.

Household Products For Smart Home Innovation

At home, AI in robotics turns basic gadgets into helpful assistants. The Roborock Saros Z70 vacuum has an AI-guided arm that lifts socks, cables, and other small items off the floor instead of getting stuck. That small change means far fewer stalled cleaning runs and less babysitting by the owner. As these features spread, more people see smart home robots as everyday tools rather than novelties.

Manufacturing For Industry 4.0 And Smart Factories

Modern factories are moving toward connected, data-driven plants, and AI in robotics sits at the center of that shift. Systems such as the Metalspray PipeID Rover move through pipes, scan metal thickness, and apply protective coatings in places that would be risky for a person to reach. Vision systems on lines check each part at high speed and kick out defects before they ship. These steps raise worker safety, cut scrap, and hold product quality steady across large volumes.

Military For Operational Support And Risk Reduction

Defense work often means entering places that are dangerous by design. AI in robotics supports soldiers by taking on some of that risk. Drones and unmanned ground vehicles patrol borders, scout ahead, or bring supplies to forward positions while human teams stay back. Specialized robots manage explosive devices with mechanical arms guided by AI, which keeps experts out of direct blast zones. This use of machines lowers human casualties while still getting the job done.

Postal And Supply Chain For E-Commerce Optimization

E-commerce demands fast, accurate movement of goods, and AI in robotics helps logistics firms keep up. Robots such as Boston Dynamics' Stretch roll through warehouses, use computer vision to pick boxes from stacks, and place them on belts or pallets. On city streets and campuses, compact delivery robots handle the last short leg from local hubs to doorsteps. These tools speed sorting, trim delivery times, and make it easier to scale operations during heavy seasons.

Breakthrough Innovation – MIT’s AI in Robotics Speech-to-Reality System

AI in robotics is also moving into areas that once sounded like pure fantasy. A research team at MIT built a speech-to-reality system that lets a person request a simple object by voice and then watch a robot build it from modular parts in only a few minutes. Instead of learning 3D design tools or robot programming, the person just states what is needed and the system does the rest.

The workflow has several clear stages that tie language, AI models, and physical assembly into one chain:

- Speech capture and understanding: speech recognition captures the spoken request and converts it into text with enough detail to act on.

- Language analysis: a large language model reads that text and extracts the intent, such as the type of furniture, shape, and basic size.

- 3D design generation: a 3D generative model designs a digital mesh of that object that fits the request.

- Conversion to building blocks: another algorithm breaks the mesh into voxel-style building blocks that match the small physical modules the robot can handle. Geometric checks adjust the plan so that it stays stable, uses parts that are actually on hand, and connects without weak points.

- Motion planning and assembly: a path planning system chooses how the robotic arm should pick each piece, move through space, and assemble the object without collisions. The result appears as an assembled stool, shelf, chair, table, or decorative shape in just a few minutes.
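The building-block conversion and assembly stages can be illustrated with a much simplified sketch. The design is represented as a set of voxel cells and checked against the parts on hand. The code then derives a layer-first build order in which every block rests on, or connects to, a block that has already been placed. This is not the MIT team's algorithm. The stool generator, the support rule (which allows side-connected blocks), and the inventory check are hypothetical simplifications, and arm motion planning is left out entirely.

```python
from typing import List, Set, Tuple

Voxel = Tuple[int, int, int]  # (x, y, z) in block units; z = 0 is the floor


def stool_voxels(width: int = 3, leg_height: int = 2) -> Set[Voxel]:
    """Hypothetical stool design: four corner legs under a solid square seat."""
    cells: Set[Voxel] = set()
    corners = [(0, 0), (0, width - 1), (width - 1, 0), (width - 1, width - 1)]
    for x, y in corners:
        for z in range(leg_height):
            cells.add((x, y, z))
    for x in range(width):
        for y in range(width):
            cells.add((x, y, leg_height))
    return cells


def assembly_order(cells: Set[Voxel], inventory: int) -> List[Voxel]:
    """Greedy, layer-first order in which each block is supported when it is placed."""
    if len(cells) > inventory:
        raise ValueError(f"design needs {len(cells)} blocks, only {inventory} on hand")
    placed: Set[Voxel] = set()
    order: List[Voxel] = []
    remaining = set(cells)
    while remaining:
        progress = False
        for x, y, z in sorted(remaining, key=lambda c: (c[2], c[0], c[1])):
            side = [(x - 1, y, z), (x + 1, y, z), (x, y - 1, z), (x, y + 1, z)]
            # Supported if on the floor, stacked on a placed block, or joined to one at the side.
            if z == 0 or (x, y, z - 1) in placed or any(n in placed for n in side):
                placed.add((x, y, z))
                order.append((x, y, z))
                remaining.discard((x, y, z))
                progress = True
        if not progress:
            raise ValueError("some blocks have no support path; the design must be revised")
    return order


plan = assembly_order(stool_voxels(), inventory=20)
print(len(plan), "blocks, first layer:", plan[:4])
```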

“The best interfaces feel like a conversation rather than a programming task.” — VibeAutomateAI

This approach offers several clear gains over 3D printing. Build times drop from hours or days to minutes, and parts can later be taken apart and reused in new forms rather than thrown away. That fits well with a more circular view of manufacturing. As the team adds better connectors, small mobile builders, and gesture input, projects like this show how AI in robotics may let people shape their physical spaces far more directly. At VibeAutomateAI, we follow work like this closely so we can explain its real impact for teams planning future plants and products.

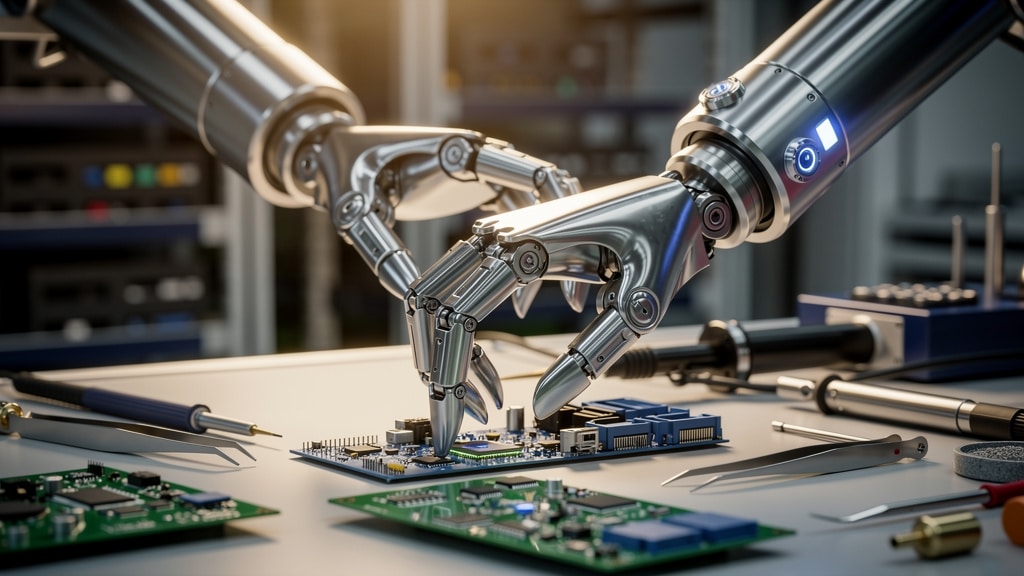

The Human Element – Career Path of an AI in Robotics Engineer

Behind every real deployment of AI in robotics stands a group of robotics engineers. These specialists carry a system from first sketch through live rollout and into years of updates. They think about mechanical design, sensor placement, control loops, and AI models, but they also balance safety rules, maintenance needs, and business goals.

On a normal day, a robotics engineer might:

- Design and test a new end effector for a pick-and-place robot, then tune motion profiles so it hits the right speed without shaking parts loose.

- Review sensor logs to spot causes of small errors and refine control software.

- Plan predictive maintenance steps before wear turns into failure.

- Work with operations, safety, and IT teams to align robots with real-world constraints.
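The first task on that list, tuning a motion profile, often starts from a trapezoidal velocity profile: accelerate at a fixed rate, cruise at a capped speed, then decelerate. Lowering the acceleration limit is gentler on parts but lengthens every move, and this short sketch makes that trade-off visible. The distance and limits are illustrative values, not tuned figures for any real robot.

```python
import math


def move_time_s(distance_m: float, v_max: float, a_max: float) -> float:
    """Duration of a rest-to-rest move under a symmetric trapezoidal velocity profile."""
    ramp_distance = v_max ** 2 / a_max   # distance used accelerating plus decelerating
    if distance_m >= ramp_distance:
        # Trapezoid: ramp up, cruise at v_max, ramp down.
        return 2 * v_max / a_max + (distance_m - ramp_distance) / v_max
    # Triangle: the move is too short to ever reach v_max.
    return 2 * math.sqrt(distance_m / a_max)


# Same 1 m move at 0.5 m/s, two acceleration limits: gentler motion costs cycle time.
for a in (2.0, 0.5):
    print(f"a_max={a} m/s^2 -> {move_time_s(1.0, 0.5, a):.2f} s")
```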

Many also help shape AI governance for their organizations by setting rules for how data from AI in robotics is used, stored, and audited.

Most roles in this field call for at least a bachelor's degree in computer engineering, computer science, electrical engineering, or a similar area, and many employers favor a master's degree. Fluency in languages such as Python and C or C++, strong skills in algorithm design, and a calm, systematic approach to problem solving all matter. Because tools and methods move fast, good engineers keep learning and testing new ideas.

“The best way to predict the future is to invent it.” — Alan Kay

As of early 2023, average pay for robotics engineers in the United States stood at about 100,205 dollars per year, which reflects strong demand for people who can combine AI with physical systems. VibeAutomateAI aims to support this talent pool with guides that make cutting-edge practice easier to apply.

The Future Of AI In Robotics – The Path To AGI

Many teams first look at AI in robotics as a way to cut costs or raise throughput, but the longer story points toward something larger, and ongoing research published in Frontiers in Robotics and AI explores questions about machine intelligence and embodied cognition. Researchers use the term Artificial General Intelligence (AGI) for systems that can understand, learn, and solve a wide range of tasks at a level similar to a person. One strong idea is that reaching this point may require giving AI a body that can move and sense the real world.

A robot that can see, hear, and touch gets constant feedback from its actions:

- When it pushes an object, the robot notices resistance.

- When it turns, the scene in the camera changes.

- When it bumps a table, the sound and motion combine into one clear lesson.

This tight loop of action and response mirrors how a small child learns basic rules about gravity, balance, and cause and effect. AI in robotics supports the same loop at machine speed and scale.
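The push-and-feel part of that loop can be sketched as an online estimate. Each push gives the robot a pair of values, applied force and measured acceleration. A least-squares fit of F = m a turns those experiences into a steadily improving estimate of the object's mass. The sketch ignores friction and sensor bias and is meant only to show learning from physical feedback. The class and the sample readings are hypothetical.

```python
from typing import Optional


class MassEstimator:
    """Running least-squares estimate of mass from (force, acceleration) pairs, using F = m * a."""

    def __init__(self) -> None:
        self.sum_fa = 0.0   # running sum of force * acceleration
        self.sum_aa = 0.0   # running sum of acceleration squared

    def observe(self, force_n: float, accel_m_s2: float) -> None:
        self.sum_fa += force_n * accel_m_s2
        self.sum_aa += accel_m_s2 ** 2

    def estimate_kg(self) -> Optional[float]:
        # m = sum(F*a) / sum(a^2) minimizes sum((F - m*a)^2); undefined until a push moves the object.
        return self.sum_fa / self.sum_aa if self.sum_aa > 0 else None


est = MassEstimator()
for force, accel in [(2.1, 1.0), (3.9, 2.0), (6.0, 3.0)]:  # hypothetical noisy pushes
    est.observe(force, accel)
    print(f"after a {force} N push: mass ~ {est.estimate_kg():.2f} kg")
```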

This view shifts the main question. Instead of asking only which tasks AI in robotics can handle for people, we start asking what rich streams of physical experience robots can feed into an AI mind. Projects such as MIT’s speech-to-reality work hint at a future where digital instructions and physical results sit only minutes apart. As more systems connect powerful cloud models with fleets of robots on the ground, we may see on-demand building, repair, and even creative work on a broad scale.

VibeAutomateAI tracks these long-term trends and turns them into practical insight for present-day planning. By seeing where AI in robotics may lead, leaders and technical teams can make better choices about skills, architecture, and ethics today, rather than playing catch-up later.

Conclusion on AI in Robotics

AI in robotics brings together two powerful threads. Robotics gives us the physical reach to move, grip, weld, and explore, while AI supplies pattern recognition, learning, and decision making. The projected market growth from 15.2 billion to 111.9 billion dollars suggests that this is not a passing fad but a major shift in how work gets done.

Under the surface, seven core technologies drive this shift, from machine learning and computer vision to NLP, edge computing, cobots, predictive maintenance, and digital twins. They support real gains across nine fields from agriculture and healthcare to manufacturing and logistics. None of this happens without robotics engineers who design, build, and refine these systems over time.

Looking ahead, embodied AI and steady sensory feedback may guide the field toward broader forms of intelligence, while projects like speech-to-reality hint at new ways of creating things on demand. At VibeAutomateAI, we see our role as a guide through this fast-moving space. Our practical articles and tutorials help teams use AI in robotics wisely, with an eye on both present gains and future risks. For anyone planning the next wave of automation, now is the time to study these tools and put them to careful use.

FAQs

AI in robotics raises many recurring questions, especially for teams trying to plan real projects. These short answers cover the basics and point back to key ideas in this guide.

Question One – What Is The Difference Between Robotics And Artificial Intelligence

Robotics focuses on physical machines that move and act in the real world. It covers design, construction, sensors, and control of autonomous or semi-autonomous robots. Artificial intelligence centers on software that analyzes data, spots patterns, learns, and makes decisions. When we join them, AI becomes the brain that turns simple robots into adaptive, intelligent systems.

Question Two – Which Industries Benefit Most From AI-Powered Robotics

Many sectors already see strong gains from AI in robotics. Manufacturing leads with smart factories that use industrial robots and AI vision to raise output and quality. Automotive plants use it for production and for self-driving research. Healthcare, agriculture, logistics, aerospace, food service, military work, and home devices all see faster, safer, and more precise operations from intelligent robots.

Question Three – How Does Machine Learning Improve Robot Performance

Machine learning lets robots improve through experience instead of fixed scripts. Models study logs of past motion, sensor readings, and outcomes, then adjust control settings to reduce errors and save time. Mobile robots learn better routes through busy spaces. Arms learn grip force that avoids slips or damage. Over time, AI in robotics grows more reliable as the system keeps learning from fresh data.

Question Four – What Skills Are Needed To Become A Robotics Engineer

A robotics engineer usually needs a degree in computer engineering, computer science, electrical engineering, or a related field. Strong coding skills in languages such as Python and C or C++ help, as do solid foundations in algorithms, control theory, and basic mechanics. Good problem-solving habits and a drive to keep learning new tools matter as much as formal training. Knowledge of AI in robotics, including machine learning and computer vision, makes candidates stand out, and pay in the United States often centers around six figures for skilled staff.
