Introduction

Machine learning in security is essential as ransomware crews, phishing kits, and automated bots move faster than any human team can respond. It helps close the gap by detecting patterns and threats that traditional tools miss. New attack methods appear, change shape, and disappear before most systems can even add a signature, leaving many teams chasing smoke with defenses built for a quieter, slower era.

That is where machine learning in security comes in. Instead of waiting for known bad signatures, machine learning watches behavior and patterns across networks, endpoints, cloud services, and users. It spots strange activity at machine speed and surfaces what matters most. Used well, it shifts defense from “clean up after the mess” to “catch trouble early and contain it fast.”

We see this every day at VibeAutomateAI. When we help teams apply machine learning in security, the goal is never to replace analysts. The goal is to give them breathing room, better visibility, and better data. In this guide, we walk through what machine learning really means for cybersecurity, how the main types apply, where it already protects modern environments, and what it takes to deploy it safely. We also share how our human‑centric approach keeps AI as a powerful assistant, not an unchecked decision‑maker.

Key Takeaways: Machine Learning in Security Explained

- Machine learning in security can process millions of events across logs, endpoints, cloud platforms, and identities in seconds. That scale lets it flag patterns and subtle issues that humans would miss or would find only after long manual work.

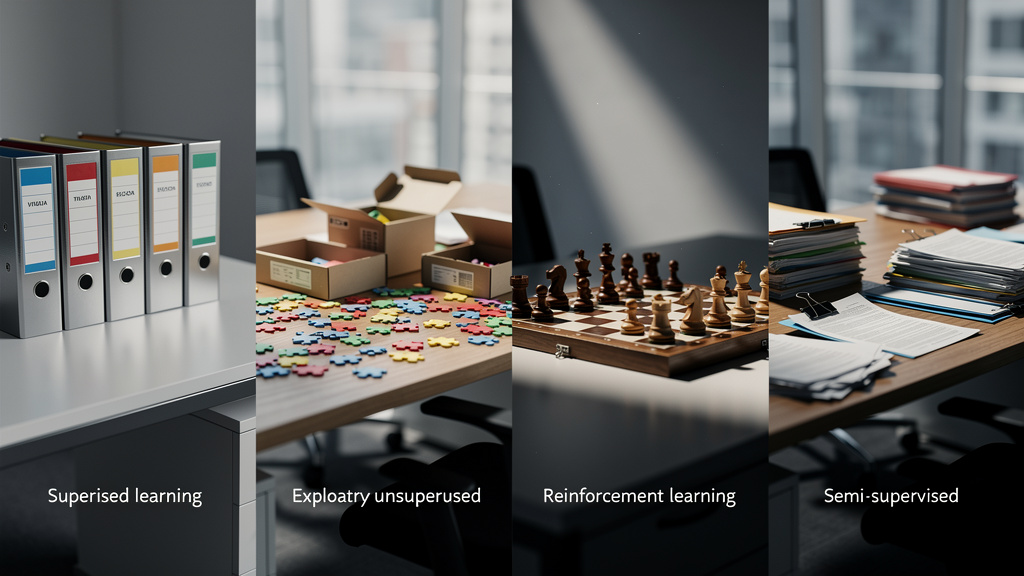

- Different approaches such as supervised, unsupervised, reinforcement, and semi‑supervised learning solve different security problems. Understanding these types helps leaders select the right products instead of buying a generic “AI” label.

- Well‑designed machine learning takes over repetitive work like triage, log review, and basic investigations. That shift frees analysts to focus on threat hunting, incident handling, and guiding security culture across the business.

- Good results depend on strong data, reasonable model choices, and ongoing human oversight. Machine learning is powerful, but it still needs people to set goals, review edge cases, and correct mistakes over time.

- VibeAutomateAI focuses on AI that supports people, with practical guidance, pre‑built models, and clear feedback loops. That approach makes advanced machine learning in security reachable for organizations of many sizes through cloud platforms and ready‑to‑use security models.

What Is Machine Learning in Security and Why Does It Matter?

Machine learning is a branch of AI where systems learn from data rather than being hand‑coded for every rule, and it is changing how organizations approach cybersecurity. Instead of writing “if this, then that” logic for each threat, we train models with large sets of examples. Over time, the model learns patterns that help it decide whether new activity looks safe or risky.

AI is the broad idea of machines acting with some level of “intelligence.” Machine learning is the very practical side of that idea. It uses algorithms and models that adjust themselves as they see more data. In machine learning in security, those models look at network traffic, endpoint behavior, identity events, email patterns, and more to spot suspicious activity.

Modern environments generate millions of events per minute across on‑prem systems, public cloud, SaaS tools, and remote endpoints. No security team can manually review that flood of data or connect all the dots in time. Machine learning does the heavy lifting by scanning, grouping, and ranking events so analysts can focus on the highest‑value work.

Two things matter most for results:

- Data quality. Poor, noisy, or biased data leads to poor models, which can miss attacks or drown teams in false alarms.

- Fit‑for‑purpose algorithms. You need models that match the use case, such as classification for malware or clustering for odd user behavior.

At VibeAutomateAI, we treat machine learning as an assistant to human decision‑making. The best outcomes come when models handle the scale and speed, while humans apply context, common sense, and business judgment.

“Security is a process, not a product.” — Bruce Schneier

Machine learning fits into that process as another powerful set of methods, not as a one‑time purchase that solves security by itself.

Machine Learning in Security: The Four Models Changing Everything

Not all machine learning works the same way. Different learning styles fit different problems, from spotting known malware to uncovering brand‑new attack patterns, and research on machine learning techniques in cybersecurity documents these differences. When we guide clients through vendor choices, these four types are among the first things we explain, because they shape how a tool will behave and where it will add the most value.

Supervised Learning: Teaching Systems To Recognize Known Threats

Supervised learning trains models on data that already has clear labels, often called ground truth. In a security context, that might be:

- Large sets of files tagged as malicious or safe

- Emails marked as phishing or legitimate

- Traffic flagged as normal or attack traffic

The model learns which features line up with each label.

During training, we feed these labeled examples to the algorithm again and again so it can learn the patterns that signal “bad” versus “good.” Common methods include Random Forest, Support Vector Machines, Logistic Regression, and Naïve Bayes. In machine learning in security, supervised learning is strong at:

- Malware classification

- Phishing detection

- DDoS prediction

- Detecting spoofing attempts

It can reach high accuracy for known threat categories, but it relies on having plenty of labeled data and can struggle with attacks that look very different from anything it has seen before.
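To make the training idea concrete, here is a minimal sketch of a supervised classifier: a tiny Naïve Bayes model for phishing detection written in plain Python. The training messages, labels, and function names are invented for illustration, and a real deployment would use far more data and richer features than raw words.

```python
import math
from collections import Counter

# Hypothetical labeled examples (text, label). Real training sets are much larger.
TRAINING = [
    ("verify your account password now", "phishing"),
    ("urgent invoice payment overdue click link", "phishing"),
    ("your mailbox is full click to verify", "phishing"),
    ("meeting notes from monday attached", "legitimate"),
    ("lunch on friday with the team", "legitimate"),
    ("quarterly report draft for review", "legitimate"),
]

def train(examples):
    """Count words per label and messages per label."""
    word_counts = {}        # label -> Counter of words seen under that label
    doc_counts = Counter()  # label -> number of training messages
    for text, label in examples:
        doc_counts[label] += 1
        word_counts.setdefault(label, Counter()).update(text.split())
    vocab = {word for counts in word_counts.values() for word in counts}
    return word_counts, doc_counts, vocab

def predict(model, text):
    """Return the label with the highest log-probability (Laplace smoothing)."""
    word_counts, doc_counts, vocab = model
    total_docs = sum(doc_counts.values())
    scores = {}
    for label in doc_counts:
        total_words = sum(word_counts[label].values())
        score = math.log(doc_counts[label] / total_docs)
        for word in text.split():
            if word not in vocab:
                continue  # ignore words never seen during training
            score += math.log((word_counts[label][word] + 1) / (total_words + len(vocab)))
        scores[label] = score
    return max(scores, key=scores.get)

model = train(TRAINING)
print(predict(model, "please verify your password"))
print(predict(model, "draft notes for the team meeting"))
```

The sketch also shows the limitation noted above: a message built entirely from words the model has never seen gives it nothing to work with, so it falls back on how common each label was in training.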

Unsupervised Learning: Discovering Hidden Threats and Anomalies

Unsupervised learning works without labels. The model gets raw data and is asked to find natural groups, patterns, or outliers on its own. For security, that usually means building a picture of “normal” behavior across users, devices, and services, then flagging anything that sits far outside that pattern.

Techniques such as K‑means clustering, Principal Component Analysis, and some neural network methods help group similar behavior and spotlight anomalies, which is why anomaly detection has become a critical defense mechanism in cybersecurity. This style of machine learning in security shines in:

- Insider threat detection

- Spotting zero‑day exploits

- Discovering new attack patterns that do not match any known signature

At VibeAutomateAI, we rely on unsupervised methods when we help clients watch for subtle social engineering and unusual user behavior that a rule‑based system would never notice.
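The "learn normal, then flag what sits far outside it" idea can be sketched without any labels at all. The example below is a deliberately simple statistical stand-in, not K‑means or PCA: it scores each user by distance from the group median, measured in median absolute deviations. The user names, download volumes, and threshold are hypothetical.

```python
from statistics import median

def robust_scores(values):
    """Score each value by its distance from the median, in MAD units."""
    center = median(values)
    mad = median(abs(v - center) for v in values)
    if mad == 0:
        # Every value is (almost) identical, so nothing stands out.
        return [0.0 for _ in values]
    return [abs(v - center) / mad for v in values]

def find_outliers(observations, threshold=5.0):
    """Return the names whose score exceeds the threshold."""
    names = list(observations)
    scores = robust_scores([observations[name] for name in names])
    return [name for name, score in zip(names, scores) if score > threshold]

# Hypothetical daily download volume in MB per user.
daily_download_mb = {
    "alice": 120, "bob": 95, "carol": 110,
    "dave": 130, "erin": 105, "frank": 4800,
}
print(find_outliers(daily_download_mb))
```

No one told the code that 4,800 MB is suspicious; the value stands out only because it is far from what the rest of the group does. Production systems build similar baselines per user and across many signals at once.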

Reinforcement Learning: Training Systems Through Adversarial Scenarios

Reinforcement learning teaches a model through trial and error in a live or simulated environment. Instead of labels, the model gets rewards for good actions and penalties for poor ones, and its goal is to earn the highest reward over time. Security teams can treat the environment as a virtual battleground between defenders and attackers.

With methods like Deep Q‑Network (DQN) and Deep Deterministic Policy Gradient (DDPG), the system explores different responses and learns which sequences of steps work best. In machine learning in security, reinforcement learning appears in:

- Autonomous intrusion detection

- DDoS defense tuning

- Adversarial simulations used to test other defenses

It can learn complex, multi‑step strategies, but these projects can be demanding in terms of data, compute, and expert oversight.
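The reward-and-penalty loop is easier to see in a toy setting. The sketch below uses tabular Q‑learning, a much simpler relative of the deep methods named above, in a simulated environment where traffic is either benign or an attack. The states, actions, and reward values are assumptions chosen for illustration only.

```python
import random

STATES = ["benign", "attack"]
ACTIONS = ["allow", "block"]

# Hypothetical rewards: missing an attack costs far more than a false block.
REWARDS = {
    ("benign", "allow"): 1.0,
    ("benign", "block"): -1.0,
    ("attack", "allow"): -5.0,
    ("attack", "block"): 1.0,
}

def train_policy(steps=2000, alpha=0.1, gamma=0.5, epsilon=0.2, seed=0):
    """Learn which action to take in each state by trial and error."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in STATES for a in ACTIONS}
    state = rng.choice(STATES)
    for _ in range(steps):
        # Epsilon-greedy: mostly use the best known action, sometimes explore.
        if rng.random() < epsilon:
            action = rng.choice(ACTIONS)
        else:
            action = max(ACTIONS, key=lambda a: q[(state, a)])
        reward = REWARDS[(state, action)]
        next_state = rng.choice(STATES)  # simulated traffic arrives at random
        best_next = max(q[(next_state, a)] for a in ACTIONS)
        # Q-learning update: move toward reward plus discounted future value.
        q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
        state = next_state
    # The learned policy picks the highest-valued action in each state.
    return {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in STATES}

policy = train_policy()
print(policy)
```

The model is never told which traffic to block. It discovers that from rewards alone, which is also why real projects need a realistic simulator and careful reward design before anything runs near production.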

Semi-Supervised Learning: Maximizing Limited Labeled Data

Semi‑supervised learning blends supervised and unsupervised methods. It starts with a small set of labeled examples plus a much larger pool of unlabeled data. The model uses the labeled records as a guide, then extends what it learns to organize and classify the rest.

This approach is helpful when labeling is slow or costly, which is common in security. It fits cases like:

- Malware and ransomware detection

- Fraud detection

- Spotting malicious bots

Techniques such as pseudo‑labeling, Label Propagation, and Consistency Regularization are common. For many security teams we support, semi‑supervised learning offers a practical path to better machine learning in security without building huge labeled datasets from scratch.
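As a rough illustration of pseudo‑labeling, the sketch below starts from four labeled sessions, assigns labels to unlabeled sessions only when the model is confident, and then refits on the enlarged set. The nearest‑centroid classifier, the two features (requests per minute and percent of failed logins), the sample values, and the margin threshold are all assumptions for the example. Real features would normally be scaled before distances are compared.

```python
import math

def centroid(points):
    """Average position of a group of feature vectors."""
    return tuple(sum(coords) / len(points) for coords in zip(*points))

def fit_centroids(labeled):
    """labeled is a list of (features, label); returns label -> centroid."""
    by_label = {}
    for features, label in labeled:
        by_label.setdefault(label, []).append(features)
    return {label: centroid(points) for label, points in by_label.items()}

def predict_with_margin(centroids, features):
    """Return the closest label and the distance gap to the runner-up."""
    ranked = sorted((math.dist(features, c), label) for label, c in centroids.items())
    (nearest, label), (runner_up, _) = ranked[0], ranked[1]
    return label, runner_up - nearest

def pseudo_label(labeled, unlabeled, min_margin=20.0):
    """Label only confident unlabeled points, then refit on everything."""
    centroids = fit_centroids(labeled)
    confident = []
    for features in unlabeled:
        label, margin = predict_with_margin(centroids, features)
        if margin >= min_margin:
            confident.append((features, label))
    return fit_centroids(labeled + confident), confident

# Hypothetical sessions: (requests per minute, percent failed logins).
labeled = [((5, 2), "human"), ((8, 1), "human"), ((300, 40), "bot"), ((280, 55), "bot")]
unlabeled = [(6, 3), (310, 45), (150, 20), (4, 0), (290, 60)]

centroids, confident = pseudo_label(labeled, unlabeled)
print(confident)
```

The ambiguous session at (150, 20) sits almost midway between the two groups, so it is left unlabeled rather than guessed. That caution matters, because confidently wrong pseudo‑labels would teach the model its own mistakes.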

How Machine Learning in Security Supercharges Security Teams

Most leaders do not care about algorithms for their own sake. They care that analysts are exhausted, alerts keep piling up, and high‑impact incidents still slip through. When we apply machine learning in security programs, we focus on easing those pain points first.

Here are the main ways machine learning changes what security teams can do:

- Speed and scale. Models can scan billions of events across network traffic, endpoints, identity systems, and cloud logs in near real time. That work would take human teams months, and they would still miss patterns that span many systems and time periods. With machine learning, suspicious chains of events rise to the top in minutes instead of days.

- Automation of repetitive work. Tasks like log review, initial alert triage, and basic vulnerability checks are important but repetitive. When we guide teams through automation, they often see a thirty to forty percent drop in manual administration around tasks such as security training and routine investigations. That time goes back into deeper analysis and higher‑value work.

- A force multiplier for expert talent. Instead of sifting through noisy dashboards, analysts get a short list of prioritized, high‑risk cases with supporting context. Many SOC leaders tell us that automation “gives analysts back the time to think,” which is exactly what modern defense needs.

- Better detection of new and zero‑day threats. Signature‑based tools depend on known patterns and fall short when attackers change tactics. Models built around behavior and relationships can catch strange account use, lateral movement, and unusual process chains even when the exact malware strain is new.

- A shift from reaction to prediction. By learning from historic incidents and ongoing telemetry, models can flag risky infrastructure, weak configurations, and likely targets before an attack peaks. Across hybrid cloud, SaaS, and remote work settings, this pattern‑based view offers a level of visibility that manual review simply cannot match.

Our stance at VibeAutomateAI is simple: let AI carry the data load while people steer strategy, risk decisions, and security culture.

Machine Learning in Security: Real-World Protection in Action

Machine learning in security is not just a lab concept. It already sits inside many tools that guard endpoints, networks, cloud platforms, and security operations. When we map environments for clients, we often find several existing products using ML under the hood, even if the marketing does not say so clearly.

Endpoint and Network Threat Detection

On endpoints, modern endpoint detection and response (EDR) platforms, including specialized machine learning security platforms, use machine learning in two main ways:

- Static analysis looks at file features and predicts whether a file is risky before it runs.

- Behavioral analysis watches processes in real time and blocks items that act like malware, even if no hash is on any blocklist.

On the network side, models build baselines for traffic volume, connection types, and user movements. They then flag odd lateral movement, strange login locations, and patterns that resemble command‑and‑control channels. Some systems can even spot signs of malware hiding inside encrypted traffic by looking at metadata rather than breaking encryption.

Intrusion Detection and Prevention Systems use these insights to keep rules updated as attackers change tactics. VibeAutomateAI guides teams in using AI‑driven threat monitoring and pattern recognition across their security logs so they can get more from these tools.

Email Security and Social Engineering Defense

Email remains a favorite attack path, and machine learning in security plays a central role in defending it. Good filters now look at many signals at once, including headers, wording, sender history, and link reputations. This multi‑factor view helps them tell normal business messages from scams.

Natural Language Processing lets models read message text and guess intent without opening attachments. That helps catch spear phishing, vendor impersonation, and business email compromise that simple keyword checks miss.

At VibeAutomateAI, we extend this thinking beyond the inbox. Our social engineering defense work flags:

- Odd payment changes

- Strange login paths

- Message wording that feels out of character for a given contact

That blend of behavior and content matters for compliance, brand trust, and direct financial safety.

Cloud Security and User Behavior Analytics

Cloud environments change fast and involve many moving parts. Machine learning supports Cloud Security Posture Management by scanning configurations, roles, and policies across multiple clouds. It highlights risky settings, missing controls, and violations that might expose data.

User and Entity Behavior Analytics (UEBA) build a detailed baseline for every user and device. They track normal login times, locations, apps, and data access. When the same account signs in from two far‑apart countries within an hour, or a regular user suddenly downloads large volumes of sensitive data, the system raises a flag.
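The far‑apart sign‑in check, often called "impossible travel," can be sketched in a few lines. The example below computes the great‑circle distance between two sign‑ins with the haversine formula and flags the pair if the implied speed exceeds a plausible limit. The speed threshold, coordinates, and function names are assumptions, and real geolocation from IP addresses is noisier than this suggests.

```python
import math
from datetime import datetime

EARTH_RADIUS_KM = 6371.0
MAX_PLAUSIBLE_SPEED_KMH = 900.0  # assumption: roughly airliner cruising speed

def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points given in decimal degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlambda = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))

def is_impossible_travel(first, second, max_speed_kmh=MAX_PLAUSIBLE_SPEED_KMH):
    """Each sign-in is a tuple of (timestamp, latitude, longitude)."""
    (t1, lat1, lon1), (t2, lat2, lon2) = first, second
    hours = abs((t2 - t1).total_seconds()) / 3600
    distance = haversine_km(lat1, lon1, lat2, lon2)
    if hours == 0:
        # Simultaneous sign-ins from different places cannot be one person.
        return distance > 0
    return distance / hours > max_speed_kmh

# Hypothetical sign-ins for one account, 45 minutes apart.
london = (datetime(2024, 5, 1, 9, 0), 51.5074, -0.1278)
sydney = (datetime(2024, 5, 1, 9, 45), -33.8688, 151.2093)
print(is_impossible_travel(london, sydney))
```

A rule like this is only one input. UEBA products combine it with the learned baseline, so a user who routinely connects through a VPN exit in another country does not trigger an alert every morning.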

Identity and Access Management tools can tie into this view to spot stolen credentials and trigger responses such as extra checks or session cuts. VibeAutomateAI often advises clients on cloud‑native SIEM designs that feed these analytics so teams can see both cloud and on‑prem patterns in one place.

Security Operations Center (SOC) Enhancement

Inside the SOC, machine learning in security powers smarter SIEM and SOAR platforms. Models connect events that might look harmless alone but suspicious together, reduce noisy false positives, and rank incidents by likely impact so analysts know where to start.

Automation then takes over low‑risk, well‑understood steps. With our guidance, teams often let AI block known bad IPs, isolate obviously infected devices, or force re‑authentication on a suspected account without waiting for a person. Analysts stay in charge of higher‑risk moves, while threat hunting benefits from models that pull hidden patterns and related indicators of compromise out of massive log sets.
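One way to picture the split between automated and analyst‑approved actions is a small routing rule. The sketch below is hypothetical: the action names, the allow‑list, and the confidence threshold are placeholders that each team would set according to its own risk tolerance.

```python
from dataclasses import dataclass

# Hypothetical allow-list of low-risk, well-understood actions.
AUTO_APPROVED_ACTIONS = {
    "block_known_bad_ip",
    "isolate_infected_device",
    "force_reauthentication",
}

@dataclass
class ProposedAction:
    name: str                # what the automation wants to do
    target: str              # the IP, device, or account affected
    model_confidence: float  # 0.0 to 1.0, as reported by the detection model

def route_action(action, min_confidence=0.9):
    """Return 'auto' to run immediately or 'analyst_review' to queue for a person."""
    if action.name in AUTO_APPROVED_ACTIONS and action.model_confidence >= min_confidence:
        return "auto"
    return "analyst_review"

print(route_action(ProposedAction("block_known_bad_ip", "203.0.113.7", 0.97)))
print(route_action(ProposedAction("disable_admin_account", "svc-backup", 0.99)))
print(route_action(ProposedAction("isolate_infected_device", "laptop-042", 0.62)))
```

Keeping the allow‑list explicit makes the policy easy to audit: anything not listed, or anything the model is unsure about, goes to a human by default.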

How to Measure Machine Learning in Security Performance

Security buyers hear bold claims about “near perfect detection,” but the real picture is more nuanced. To judge any machine learning in security product, it helps to understand how its model behaves on correct hits, misses, and false alarms.

Classification models, such as those for malware detection, have four basic outcomes:

- True Positive (TP): The model flags a threat and that verdict is correct.

- True Negative (TN): The model allows benign activity to pass and that choice is also correct.

- False Positive (FP): Safe activity gets flagged as malicious.

- False Negative (FN): A real threat is treated as safe and slips through defense layers.

Detection efficacy describes the balance between catching as many real threats as possible while keeping false alarms low enough that teams can act on them:

- Pushing sensitivity higher often raises both true positives and false positives.

- Pushing it lower may hide noise but also lets more real attacks through.

The “right” balance depends on the control type and the organization’s risk tolerance. A tool that blocks outbound data might accept higher false positives than a simple monitoring feed.
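The four outcomes above combine into a few standard rates that are worth asking vendors about. Here is a minimal sketch of the arithmetic, using made‑up counts from an imagined evaluation of 10,000 events.

```python
def detection_metrics(tp, tn, fp, fn):
    """Compute common rates from the four classification outcomes."""
    def ratio(numerator, denominator):
        return numerator / denominator if denominator else 0.0

    return {
        # Share of real threats that were caught (also called recall).
        "detection_rate": ratio(tp, tp + fn),
        # Share of benign activity that was wrongly flagged.
        "false_positive_rate": ratio(fp, fp + tn),
        # Share of alerts that were real threats.
        "precision": ratio(tp, tp + fp),
    }

# Hypothetical counts: 100 real threats hidden in 10,000 events.
metrics = detection_metrics(tp=90, tn=9800, fp=100, fn=10)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

With these counts the detection rate is 90 percent and the false positive rate is about 1 percent, yet fewer than half of the alerts (about 47 percent) are real threats. When attacks are rare compared with normal activity, even a low false positive rate can dominate an analyst's queue, which is why precision belongs next to any headline detection figure.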

When we evaluate machine learning in security tools with clients, we ask vendors for clear detection rates, false positive rates, and examples of recent improvements. We also ask how their models learn from analyst feedback, so the same false positives do not recur. At VibeAutomateAI, we favor products and designs that expose enough detail to explain why the model flagged an event, because that transparency builds trust and helps teams refine their defenses over time.

Machine Learning in Security: Challenges and Limitations

Machine learning in security is powerful, but it is not magic. We earn more trust with clients by talking about the hard parts up front than by overselling what AI can do.

“Artificial intelligence is the new electricity.” — Andrew Ng

Security teams feel that shift as more tools add ML features, but those tools still depend on solid engineering, clear goals, and good data.

Data quality and availability sit at the center of every project. If logs are incomplete, poorly labeled, or scattered across tools, the models will learn the wrong lessons. We help teams improve collection, normalize data from many sources, and where helpful rely on pre‑built models trained on wide threat intelligence so they do not start from zero. That combination reduces the “garbage in, garbage out” risk.

Resource and skill requirements also matter. Training large models and keeping them in good shape can demand heavy compute, storage, and hard‑to‑find experts. To counter this, we prefer cloud‑based platforms, managed services, and ready‑made capabilities where possible. That way, organizations can use advanced machine learning in security without hiring a full data science group.

Another challenge is explainability. Deep models can feel like a black box, which makes it hard for analysts to trust their output or justify actions to auditors. Our approach leans toward interpretable methods for security decisions whenever we can. We also keep humans in the loop for sensitive actions, so models suggest and people approve for high‑impact steps.

Adversarial attacks on ML systems are a rising concern, with specialized platforms like HiddenLayer addressing security for AI models themselves. Attackers can poison training data or craft samples meant to fool models. We address this by encouraging model hardening, monitoring for behavior drift, and adding human review layers for strange or high‑risk cases. Overfitting, underfitting, and noisy false positives are ongoing technical risks as well. We respond with broad test sets, regular retraining on new threat data, and feedback loops where analyst corrections feed back into the model.

Across all of these areas, our core message stays the same. At VibeAutomateAI we treat AI as an assistant. Machines handle volume and speed, while humans handle meaning, context, and final calls. That mix gives organizations a safer and more sustainable path to using machine learning in security.

Machine Learning in Security: Common Myths Debunked

Misunderstandings about AI can stop organizations from acting or push them toward bad bets. We often start client workshops by clearing up a few common myths around machine learning in security.

Myth 1: Machine learning will replace human security experts.

In real use, models handle sorting, pattern spotting, and fast reactions, while humans still provide context, creativity, and a sense of attacker intent. Our position at VibeAutomateAI is simple. AI should support analysts by taking busywork away and giving them time for strategic threat hunting and incident handling.

Myth 2: Models are infallible.

In reality, they are statistical tools that follow their training data. If that data is biased, thin, or noisy, the model will make bad calls. We always recommend continuous monitoring, regular retraining, and human validation on sensitive decisions to keep errors in check.

Myth 3: Machine learning systems are safe from attack.

In fact, they add a new attack surface. Adversaries can try to poison training sets or create inputs that mislead a model on purpose. That is why we stress defense‑in‑depth, model hardening, and security controls around the ML pipeline itself, not just around traditional systems.

Myth 4: Every security problem needs heavy machine learning automation.

Some tasks are rare, simple, or well served by basic rules or queries. Since ML can be costly, we advise focusing it on high‑value, high‑frequency problems where speed and accuracy really matter. That is where clients see clear return on investment.

Myth 5: Only large enterprises can use machine learning in security.

Thanks to cloud tools, pre‑trained models, and more accessible platforms, small and mid‑size organizations can now apply these methods as well. VibeAutomateAI designs guidance and offerings with these teams in mind, so they can tap into modern defenses without massive upfront spend.

Getting Started: Building Your Machine Learning Security Strategy With VibeAutomateAI

Once leaders understand the basics, the next question is how to put machine learning in security to work without biting off too much at once. We like to frame this as a set of clear, manageable steps.

- Step 1: Assess your current security posture and pain points.

Look for places where data volume, alert noise, or response time are out of control, such as endpoint alerts, email queues, or identity events. Rank these areas by business risk and staff burnout. VibeAutomateAI offers posture assessments that highlight where AI can bring the fastest, safest gains.

- Step 2: Define success metrics and measure your starting point.

Common metrics include mean time to detect (MTTD), mean time to respond (MTTR), false positive rates, and the amount of analyst time spent per incident. Writing these numbers down before any change lets you prove impact later. We help teams pick realistic targets based on industry baselines and their current maturity, then build a clear before‑and‑after view of improvements.

- Step 3: Begin with high‑impact, lower‑complexity use cases.

For many organizations, that means endpoint threat detection, email security, or behavior analytics rather than custom model development. Pre‑built models and cloud platforms shorten time to value. Our AI‑powered learning frameworks for security awareness are also a gentle entry point, using machine learning to personalize training while keeping risk low.

- Step 4: Set up human‑in‑the‑loop governance.

Decide which automated actions can run without review and which need approval from an analyst or manager. Build feedback paths where analysts mark alerts as useful or noise so models can improve. VibeAutomateAI always recommends clear roles and review steps so AI supports, not overrides, human judgment.

- Step 5: Plan for ongoing improvement and model care.

Threats change and so must models, so budget time for performance reviews, retraining on new data, and tuning based on lessons learned. We guide clients through long‑term ML operations, including monitoring, retraining schedules, and integration with security processes.
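For the baseline in Step 2, the arithmetic is simple enough to run from a spreadsheet export. The sketch below assumes each incident record has three timestamps and defines MTTD as start to detection and MTTR as detection to resolution. Definitions vary between teams, so treat the field names and the sample incidents as placeholders.

```python
from datetime import datetime
from statistics import mean

# Hypothetical incident records exported from a ticketing system.
incidents = [
    {
        "started": datetime(2024, 3, 1, 8, 0),
        "detected": datetime(2024, 3, 1, 10, 0),
        "resolved": datetime(2024, 3, 1, 16, 0),
    },
    {
        "started": datetime(2024, 3, 5, 22, 0),
        "detected": datetime(2024, 3, 6, 2, 0),
        "resolved": datetime(2024, 3, 6, 6, 0),
    },
]

def mean_hours(records, start_key, end_key):
    """Average elapsed time in hours between two timestamps per record."""
    return mean(
        (record[end_key] - record[start_key]).total_seconds() / 3600
        for record in records
    )

mttd_hours = mean_hours(incidents, "started", "detected")
mttr_hours = mean_hours(incidents, "detected", "resolved")
print(f"MTTD: {mttd_hours:.1f} h, MTTR: {mttr_hours:.1f} h")
```

Running the same calculation before and after a rollout, over comparable periods, gives the before‑and‑after view described in Step 2.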

By following these steps with an experienced partner, organizations can move from theory to practice. VibeAutomateAI stands ready to help design, implement, and refine machine learning in security programs that fit real‑world constraints and deliver measurable gains.

Conclusion

Attackers are not slowing down, and manual defenses cannot keep up with the volume, speed, and creativity of modern threats. Machine learning in security gives defenders a way to keep pace, by scanning huge data sets, spotting subtle patterns, and reacting faster than any human alone could manage.

The real power of these methods comes when they work side by side with skilled people. Models bring scale and speed, while humans bring context, ethics, and business sense. That blend turns security from a constant race to catch up into a more proactive practice that can see trouble earlier and respond with more confidence.

At VibeAutomateAI, we focus on practical, human‑centric adoption of AI. We help teams choose the right use cases, evaluate vendor claims, improve data quality, and build feedback loops that keep models honest. As AI continues to mature, the organizations that pair it with strong security leadership will be the ones best prepared for what comes next.

If it is time to put machine learning in security to work for your organization, we invite you to connect with us. Together we can design an approach that fits your environment, supports your team, and strengthens your defenses step by step.

FAQs

Question: Can Machine Learning Detect Zero-Day Attacks That Have Never Been Seen Before?

Machine learning can improve detection of zero‑day attacks, especially when it focuses on behavior instead of known signatures. As described in the section on unsupervised learning, unsupervised methods learn what “normal” looks like, then flag activity that falls far outside that pattern. That helps catch unknown malware, strange account use, or unusual network paths. No system can promise perfect coverage, so we still recommend a layered defense.

Question: How Much Does It Cost To Implement Machine Learning Security Tools?

Costs depend on many factors, including organization size, data volume, use cases, and whether tools run on‑prem or in the cloud. Cloud‑based and SaaS security products that include machine learning in security have made advanced defenses much more reachable for smaller teams. The business case often includes lower breach risk, faster responses, and higher analyst productivity. VibeAutomateAI can walk through your environment and build a detailed cost‑benefit view before you commit.

Question: What Is The Difference Between AI and Machine Learning In Cybersecurity?

AI is the broad idea of computers acting with some form of intelligence, such as planning or perception. Machine learning is a subset that learns patterns from data to make predictions or decisions. Most “AI security” tools on the market are in fact using machine learning models. Marketing language may blur the lines, but machine learning is usually the key technology driving detection and automation.

Question: Will Machine Learning Replace My Security Team?

No, and it should not. In real deployments, machine learning in security takes over repetitive work such as log review and basic triage, while people focus on complex cases and strategy. Analysts provide context, creative thinking, and an understanding of business impact that models do not have. At VibeAutomateAI we design human‑centric systems, and many SOC leaders tell us that automation gives their teams more time, not less, to do meaningful work.

Question: How Long Does It Take To Train a Machine Learning Security Model?

The timeline varies. Training or tuning a pre‑built model for a specific environment may take hours or a few days, especially when using cloud platforms. Building and validating a new model from scratch can take weeks or months, depending on data size and goals. In practice, most organizations rely on vendor‑maintained models that are updated over time. Semi‑supervised learning can also speed things up when labeled data is limited.

Question: What Data Does Machine Learning Need To Detect Security Threats Effectively?

Effective models rely on rich, clean data from across the environment. Important sources include:

- Network traffic logs

- Endpoint telemetry

- User authentication events

- Cloud access logs

- Email metadata

The exact volume and mix of data depend on the use cases, such as malware detection or insider threat monitoring. Centralized logging and sensible retention policies help feed these models, as discussed earlier in the section on data quality and implementation challenges.
