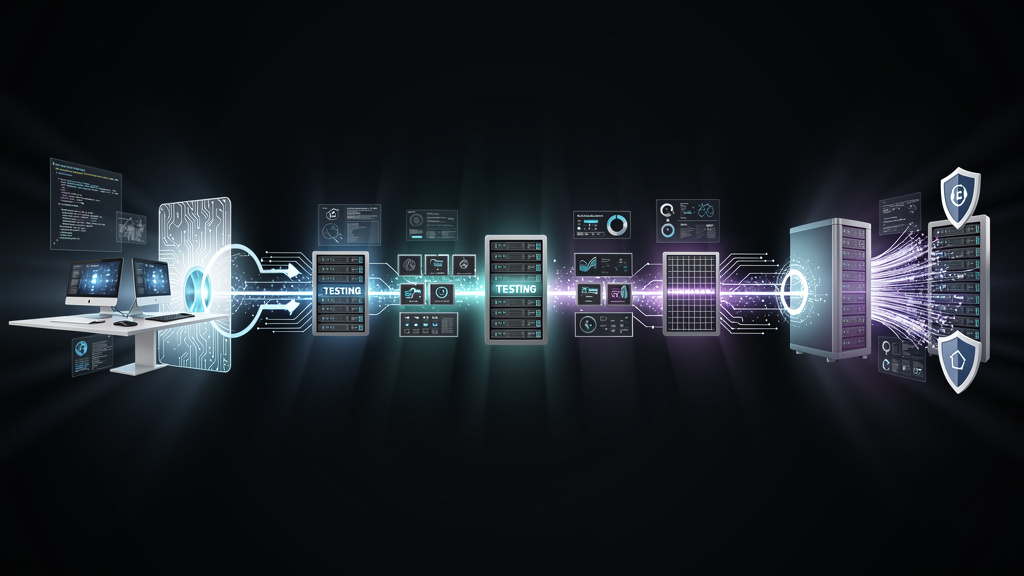

Introduction

My first serious automation deployment felt like a movie scene. I clicked the deploy button, watched the logs fly by, and waited for the magic. Instead, the automation engine crashed, half the webhooks failed, and a very angry support team pinged me faster than the alerts.

That day taught me a simple truth. Automation deployment on paper looks clean and linear, yet real environments are messy, political, and full of invisible dependencies. The diagrams in internal docs rarely match what actually runs in production, and that gap is exactly where outages live.

“Automation that only works in theory is just a demo.” — Common DevOps Saying

Since then, I have rolled out automation deployment projects across scrappy startups and regulated enterprises, mostly around n8n and AI-driven workflows, while keeping up with current research on IoT and industrial automation trends. I have seen teams ship value fast with simple cloud setups, and I have also watched self-hosted stacks fall over because someone forgot who was on call for the database server.

In this article I share what I actually look at before any automation deployment. Not the idealized DevOps pipeline diagram, but the questions, technical checks, and hard lessons that keep production calm. I will walk through how I choose between cloud and self-hosted n8n, the hidden technical requirements, my real deployment process, and the failure patterns I now avoid on sight.

VibeAutomateAI grew straight out of these experiences. I use it to give people blunt, field-tested guidance on n8n deployment decisions, not marketing copy. If this article does its job, it will help avoid a few painful outages, shorten the learning curve, and make the next automation deployment feel a lot less like that first crash.

Key Takeaways for Automation Deployment

- Automation engines are not “just another app.” Many teams treat automation deployment as simple app hosting, yet workflow engines touch more systems, more often, which multiplies risk unless the process is planned with that in mind. A small misconfiguration that a normal app might survive can quietly break many automations at once and stay hidden until Monday morning.

- Cloud vs self-hosted n8n is a strategic choice. The choice between n8n Cloud and self-hosted n8n is not just about technical taste; it shapes data control, compliance posture, and how far custom AI work can go. Thinking only about the next month often creates painful migrations about a year later, when automation becomes business critical.

- Self-hosted brings a maintenance tax. Self-hosted automation deployment adds ongoing work around monitoring, backups, security updates, and on-call coverage. Those items rarely show up in early planning, yet they decide whether the stack feels empowering or exhausting.

- Most failures come from boring details. Many failed automation deployments break on details such as configuration management, idempotent scripts, and environment drift. Getting those basics right matters far more than picking the “coolest” stack or the flashiest cloud service.

- Simple, repeatable processes win. A straightforward, well-tested deployment process with clear smoke tests, rollback steps, and honest capacity checks beats any ornate pipeline diagram. VibeAutomateAI exists to guide that kind of practical planning for n8n, so deployment choices match real risks, not wishful thinking.

What Automation Deployment Actually Means (And Why Most Guides Get It Wrong)

When I say automation deployment, I am not just talking about pushing code or starting a Docker container. I mean putting an automation engine such as n8n into a real environment where it runs workflows that touch live customers, money, personal data, and internal systems that other teams depend on. That is a very different level of responsibility than spinning up a side project on a cheap VPS.

Textbook guides often describe deployment in neat stages: build, test, deploy, monitor. That pattern works fine for a simple web app that just serves pages. Automation deployment is different because the “product” is the set of workflows, triggers, and integrations that keep moving in the background. One broken node can quietly stall invoices, alerts, or onboarding flows without any browser error page to warn people.

Another gap I see often is confusion between build automation and deployment automation:

- Build automation compiles or packages code.

- Deployment automation moves a tested package through environments and makes it live in production.

Many teams stop after the build stage and then rely on half-manual scripts or tribal knowledge to get automations installed. That tends to work until the first serious incident.

Workflow tools such as n8n add more layers. It is not just the application binary, it is the database schema, queue settings, external webhooks, third-party APIs, and sometimes private models for AI tasks. Automation deployment has to respect all of that. Treating it as “copy some files to a server and restart” is a fast path to intermittent failures and very long debugging sessions.

On top of the technical pieces, deployment choices for automation engines carry long-term weight around data ownership, security, and flexibility. Where does workflow history live? Who can see logs? Can an auditor confirm where sensitive data flows? Can the team plug in self-hosted AI next year without ripping everything apart? Most guides mention these topics in passing; real projects live or die on them.

“You build it, you run it.” — Werner Vogels, AWS CTO

That mindset applies just as strongly to automation engines as to any other production system.

The Strategic Weight of Your Automation Deployment Choice

One client I worked with started their n8n automation deployment on a managed cloud plan because it was quick. That choice helped them move fast at first. Six months later their legal team stepped in, worried about payment data passing through an external vendor. They then had to rush into a self-hosted migration under pressure, right when the automation stack was already carrying important workloads.

That story is common. Deployment models decide more than where logs live. They define data residency, which matters a lot once finance, health, or HR teams connect their systems. If automation runs only on a vendor’s cloud region, compliance work becomes far harder than if the stack already lives inside controlled infrastructure.

There is also a link to future AI and machine learning work. Many organizations now treat custom AI models and training data as core intellectual property. A cloud-only automation deployment might look simple now, but if every workflow that touches that data must stay on infrastructure you control, the picture changes. I now ask how people expect to use AI twelve to eighteen months from now before I recommend any path.

The tempting option is usually the fastest one: click a button, get an instance, start building flows. I have learned to treat that “easy now” feeling with suspicion. The better question is how well the choice fits the company’s risk profile, data sensitivity, and growth plans. Technical preference matters, yet it should never outweigh those factors.

“Every architecture decision has a cost; you just pay it at different times.” — Senior Architect I Once Worked With

That quote sits in the back of my mind whenever I talk about automation hosting.

My Framework for Choosing Between Cloud and Self-Hosted Automation Deployment

When someone asks me whether they should run n8n in the cloud or self-host it, I never give a direct answer first. Instead, I explain that the real trade-off sits between convenience and control. Cloud gives speed, less maintenance work, and simpler upgrades. Self-hosting gives deeper control over data, network, and customization, but also shifts much more responsibility onto the team.

Over time I built a simple framework to keep those trade-offs honest. Before any automation deployment I walk through four questions:

- What is the true data sensitivity profile?

- What kind of DevOps capacity does the team really have?

- What does the eighteen-month automation roadmap look like?

- What budget and internal politics shape the decision?

Taken together, those answers point to the right hosting model more reliably than any feature list.

In practice I see a clear pattern:

- Cloud deployment shines when teams want speed to value, work with mostly internal or low-risk data, and do not yet have a strong DevOps bench. Forcing self-hosting early just burns hours on patching servers and chasing logs instead of building workflows that help the business.

- Self-hosting becomes non-negotiable when automations touch proprietary data that must stay under tight control, when workflows call into custom AI models on private infrastructure, or when regulations demand strict control over storage, access logs, and network paths.
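The pattern above can be read as a rough rule of thumb. The Python sketch below only illustrates that reasoning: `TeamProfile`, its fields, and `recommend_hosting` are hypothetical names, and a real decision weighs far more than three yes/no answers.

```python
from dataclasses import dataclass


@dataclass
class TeamProfile:
    # All fields are illustrative assumptions, not an official checklist.
    handles_regulated_or_proprietary_data: bool
    calls_private_ai_models: bool
    has_named_oncall_and_backup_owner: bool


def recommend_hosting(team: TeamProfile) -> str:
    """Return a rough hosting suggestion based on the pattern described above."""
    needs_control = (
        team.handles_regulated_or_proprietary_data or team.calls_private_ai_models
    )
    if not needs_control:
        # Low-risk data and no private AI: speed to value wins.
        return "cloud"
    if team.has_named_oncall_and_backup_owner:
        return "self-hosted"
    # Control is required but the operational bench is missing:
    # surface the skills gap instead of hiding it.
    return "self-hosted, after closing the skills gap"
```

The third branch is the uncomfortable one: the data demands self-hosting, yet nobody owns backups or incidents.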

The tricky part is honesty. I have met plenty of smart developers who said, “We will figure out the self-hosted part as we go.” A year later they were buried under maintenance tasks, with no clear owner for backups or incident response. That is why I now include a very direct skills gap check with every team.

There is also a practical migration pattern that works well:

- Start with n8n Cloud.

- Keep data sensitivity in mind from day one.

- Prove real value with a few solid automations.

- Move the stack to self-hosted n8n when volume or compliance pressure justifies the switch.

At VibeAutomateAI I recommend that path often because it lines up with how automation programs usually grow in the real world.

Question 1: What’s Your Data Sensitivity Profile?

I use a very simple classification whenever I scope an automation deployment:

- Public data – marketing content, public product data, anonymized metrics.

- Internal data – internal reports or support tickets without personal details.

- Sensitive data – names, emails, internal IDs, and anything that might cause harm if leaked.

- Regulated data – health records, payment card details, or anything covered by strict laws.

For automation workflows this mapping matters more than vague statements like “we handle sensitive data.” I want to know what flows through the engines, not just what exists elsewhere in the company. A contact enrichment workflow with public company data is very different from a triage flow that reads raw patient notes.

I once saw a “safe” reporting workflow that combined several harmless data sources. None of them looked risky alone. Together, they created a near-complete view of staff performance tied to personal emails. Suddenly the automation deployment that handled that workflow fell under stricter rules.

Before picking a deployment model, I advise teams to draw a quick diagram of every system that n8n will touch and label data levels along the way. That light data flow audit tends to reveal whether cloud is fine with solid security practices or whether self-hosting is the only realistic choice.
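A light data flow audit like this can also live in a few lines of Python. This is a minimal sketch, assuming a hand-maintained inventory: the workflow names, system names, and the `audit` helper are all made up for illustration.

```python
from enum import IntEnum


class DataLevel(IntEnum):
    PUBLIC = 1
    INTERNAL = 2
    SENSITIVE = 3
    REGULATED = 4


# Hypothetical inventory: each workflow lists the systems it touches
# and the highest data level that flows through that connection.
WORKFLOWS = {
    "contact_enrichment": {"company_directory": DataLevel.PUBLIC},
    "patient_triage": {
        "ticketing": DataLevel.INTERNAL,
        "clinical_notes": DataLevel.REGULATED,
    },
}


def highest_level(systems: dict[str, DataLevel]) -> DataLevel:
    """A workflow is at least as sensitive as the most sensitive data it handles."""
    return max(systems.values())


def audit(workflows: dict[str, dict[str, DataLevel]]) -> dict[str, DataLevel]:
    """Label every workflow with its highest data level."""
    return {name: highest_level(systems) for name, systems in workflows.items()}
```

Treat the result as a floor, not a ceiling. Taking the maximum cannot detect cases where several harmless sources combine into something sensitive, so those still need a human review.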

Question 2: What’s Your Real DevOps Capacity?

A lot of self-hosted automation deployments fail not because the tools are bad, but because the team underestimated the operational work, including the metrics-driven DevOps practices that evidence-based management demands. When I talk about DevOps capacity, I mean more than “someone knows Docker.” I care about who sets up monitoring, who watches alerts, who handles backups, and who wakes up when the node process crashes at three in the morning.

My informal readiness checklist covers a few areas:

- Centralized logs for the automation engine, database, and reverse proxy.

- Backups that exist, have been tested, and have a restore plan with clear time limits.

- A regular schedule for OS, container, and dependency security patches, with a named owner.

- Alerting that feeds into a real on-call process instead of a shared inbox no one checks on weekends.

For n8n specifically, I often look for comfort with Docker or Kubernetes, basic database administration for Postgres, and observability tools that can track workflow failures over time. Without those skills, self-hosted n8n tends to feel fragile, even if the software itself is stable.

If I cannot identify a clear name and time for each of those responsibilities, I lean heavily toward cloud for the initial automation deployment. There is no shame in choosing managed hosting while the team builds real DevOps muscle. Making that call early avoids a lot of stress later.
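The "clear name for each responsibility" test is easy to make explicit. The sketch below assumes a simple hand-written map of responsibilities to owners; the keys and the names in it are placeholders, not a standard list.

```python
from typing import Optional

# Hypothetical responsibility map; None means nobody owns it yet.
RESPONSIBILITIES: dict[str, Optional[str]] = {
    "centralized_logs": "dana",
    "tested_backups": None,
    "security_patching": "lee",
    "on_call_alerting": None,
}


def unowned(responsibilities: dict[str, Optional[str]]) -> list[str]:
    """Return the responsibilities that have no named owner, sorted by name."""
    return sorted(name for name, owner in responsibilities.items() if not owner)


def ready_for_self_hosting(responsibilities: dict[str, Optional[str]]) -> bool:
    """Ready only when every responsibility has an owner."""
    return not unowned(responsibilities)
```

With the sample map, two items come back unowned, which under the rule above points toward cloud for the initial deployment.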

Question 3: What’s Your 18-Month Automation Roadmap?

Another question I always ask is simple: Where should this automation program be in eighteen months? Many teams start with a single repetitive workflow. Over time they want AI-based routing, custom code, and tighter integration with internal systems. The deployment model that feels fine for early experiments may start to feel cramped once these goals show up.

Custom AI models change the picture fast. If the company plans to train models on internal data, run them inside private infrastructure, or treat them as a key advantage, that shifts the automation deployment into the “high control” zone. The ability to plug n8n into private AI endpoints, keep logs local, and manage data retention becomes a real requirement.

I have seen teams outgrow a light cloud setup once they moved to heavy AI and complex workflows. Migration then happens under pressure instead of as a calm project. To avoid that, I try to pick the simplest deployment model that still leaves room for the likely future. That does not mean building everything for maximum scale on day one, just avoiding options that will clearly block the next big step.

The Critical Technical Requirements for Automation Deployment Nobody Tells You About

One of my most painful automation deployment incidents started with a script that looked perfect in staging. It spun up containers, applied migrations, ran health checks, and passed every test. On production it crashed halfway through because of a tiny difference in network settings. Half the workflows pointed at stale endpoints, and we spent hours stitching things back together.

That experience pushed me to define three non-negotiable inputs for any serious automation deployment:

- Deployable packages that are built once and reused across all environments.

- Automation scripts that describe every step in plain text and can be run by any authorized engineer, not just the person who wrote them.

- Environment-specific configuration that lives outside the package, with a clear way to inject it during deployment.

All of this needs to sit in version control. That includes the n8n configuration files, infrastructure-as-code definitions, database migration scripts, and even the pipeline definitions themselves. When everything lives in Git, I can re-create an environment, roll back a bad change, or review exactly what changed during a failed automation deployment.

Idempotency is another quiet hero. A deployment script should handle being run twice without damage. If it crashes after updating environment variables but before restarting containers, a second run should not scramble anything. For automation engines that run many scheduled tasks and webhooks, this kind of safety matters a lot.
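One common way to get that safety is to describe the desired end state and change things only when reality differs. The sketch below applies the idea to an environment file. `ensure_env_file` is a hypothetical helper, and a real deployment script would treat container restarts and migrations the same way.

```python
import os
import tempfile
from pathlib import Path


def render_env(settings: dict[str, str]) -> str:
    """Render settings as sorted KEY=value lines so the output is deterministic."""
    return "".join(f"{key}={settings[key]}\n" for key in sorted(settings))


def ensure_env_file(path: Path, settings: dict[str, str]) -> bool:
    """Bring the file to the desired state. Returns True only if it changed."""
    desired = render_env(settings)
    if path.exists() and path.read_text() == desired:
        return False  # already in the desired state; a rerun is a no-op
    # Write to a temp file in the same directory, then swap it into place,
    # so a crash mid-write does not leave a half-written file at `path`.
    fd, tmp_name = tempfile.mkstemp(dir=str(path.parent))
    with os.fdopen(fd, "w") as handle:
        handle.write(desired)
    os.replace(tmp_name, path)
    return True
```

Running the function a second time with the same settings returns `False` and touches nothing, which is the property that makes a crashed deployment safe to rerun.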

Infrastructure compatibility is a bigger issue than most people assume, especially with n8n. Different cloud providers have their own ways of handling storage, networking, and managed databases. I always check how n8n instances will talk to external APIs, where webhook traffic will land, and how private networks or VPNs fit into the picture. For self-hosted n8n, that can mean designing around Kubernetes ingress, shared Redis instances, or internal DNS.

Teams often underestimate the basics needed before automation deployment. They need:

- Stable Postgres.

- Persistent storage for workflow data.

- SSL termination and secure networking.

- Controlled access to admin interfaces.

When those pieces are fuzzy, the right answer is not to push harder on the deployment script. It is to pause and tighten the foundation first.

“If it hurts, bring the pain forward and do it more often.” — Jez Humble

Frequent, scripted deployments reveal these gaps while the blast radius is still small.

The Configuration Management Reality

From a technical point of view, the guiding principle for automation deployment is simple: build the application once, then deploy the same artifact everywhere while changing only external configuration. That pattern keeps staging and production aligned and makes weird one-off tweaks far less likely.

In practice I lean heavily on environment variables for configuration management. n8n reads most important settings from them, which keeps secrets out of images and makes updates much safer. I pair that with a secrets manager such as AWS Secrets Manager or HashiCorp Vault, so sensitive values like API keys, database passwords, and token signing keys do not sit in plain text on disk.

Different environments need different values for things like database connections, API base URLs, feature flags, and OAuth credentials for third-party tools. I keep those in clearly named files or parameter stores, rather than hand-editing values on servers. That approach makes it possible to deploy the same n8n image to staging and production while still pointing to the right backing services.
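A deployment script can enforce this by reading everything from the environment and refusing to continue when something is missing. This is a minimal sketch: the variable names follow n8n's documented environment variables as far as I know, so verify them against the docs for your version, and note that `DeployConfig` and `load_config` are hypothetical.

```python
import os
from dataclasses import dataclass
from typing import Mapping

# Assumed variable names; check them against the n8n docs for your version.
REQUIRED = ("DB_POSTGRESDB_HOST", "DB_POSTGRESDB_DATABASE", "WEBHOOK_URL")


@dataclass(frozen=True)
class DeployConfig:
    db_host: str
    db_name: str
    webhook_url: str


def load_config(env: Mapping[str, str] = os.environ) -> DeployConfig:
    """Read settings from the environment and fail fast if any are missing."""
    missing = [name for name in REQUIRED if not env.get(name)]
    if missing:
        raise RuntimeError(f"missing configuration: {', '.join(missing)}")
    return DeployConfig(
        db_host=env["DB_POSTGRESDB_HOST"],
        db_name=env["DB_POSTGRESDB_DATABASE"],
        webhook_url=env["WEBHOOK_URL"],
    )
```

Because the mapping is passed in, the same function works against `os.environ` in production and against a plain dictionary in tests.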

Configuration drift is a real threat. It starts as a quick manual tweak on the server during an incident and turns into mysterious behavior later. To fight that, I push all configuration into code or managed stores and treat any manual change as a temporary patch that must be folded back into version control. Tools like Ansible, Terraform, or cloud-native config services help keep machines aligned without guesswork.
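Drift is easier to catch when it is compared mechanically. The sketch below assumes two plain dictionaries: the expected values from version control and the actual values read from a running server. How those get loaded is left out, and `find_drift` is a hypothetical helper.

```python
from typing import Optional


def find_drift(
    expected: dict[str, str], actual: dict[str, str]
) -> dict[str, tuple[Optional[str], Optional[str]]]:
    """Map each drifted key to (expected, actual); None marks a missing side."""
    drift = {}
    for key in sorted(expected.keys() | actual.keys()):
        want, have = expected.get(key), actual.get(key)
        if want != have:
            drift[key] = (want, have)
    return drift
```

An empty result means the server matches version control. If the values include secrets, report only the drifted key names rather than printing the values.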

My Automation Deployment Process: From Planning To Production

Over time I have settled on a six-stage process for automation deployment that I follow almost every time. It is not fancy. It just catches the failure modes I have seen most often and gives me clear checkpoints where I can stop a bad release before it harms anyone.

My stages look like this:

- Planning And Design – Map which workflows will run where, what systems they connect to, and which teams own each part. During this step I also define service level expectations so that later on, nobody is surprised when a failed automation wakes them up.

- CI And Tests – Every change to automation logic or configuration runs through automated tests, even if some are simple. For n8n that can mean unit tests around custom code nodes, contract tests for critical APIs, and at least a light end-to-end flow that fires the most important workflows in a controlled environment.

- Artifact Creation – Once tests pass, the pipeline builds a deployable artifact. For n8n that usually means a Docker image that contains the exact version of the app and custom code. The same image will go to staging and production. This is the stage where I tag releases and record what changed for later review.

- Staging Deployment – I deploy the new image, apply config for staging, and run migration scripts. Then I trigger smoke tests and manual checks from key stakeholders. The goal is to get as close as possible to real-world usage without touching live data.

- Production Deployment – If staging looks good, I schedule production deployment. I prefer clear windows during normal working hours, never late on a Friday. The production step uses the same scripts and image as staging, just with different configuration. During this stage I also keep an immediate rollback command ready, tested ahead of time.

- Monitoring And Learning – I watch error rates, latency, queue depth, and business metrics that depend on n8n workflows. Any spike or dip triggers investigation. I then write a short note about what went well and what hurt during the automation deployment, so the next run improves even if nothing broke.

In summary, my process flows through planning, building and testing, artifact creation, staging release, production release, and observation with feedback. Each stage has clear exit checks, and I resist any request to skip them for “just this one hotfix,” because that exception is often the one that goes wrong.
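The "no skipped exit checks" rule can be sketched as a tiny runner that stops at the first failed stage. The stage names mirror the six stages above; the lambda checks are stand-ins for real tests, and `run_pipeline` is a hypothetical helper, not part of any CI tool.

```python
from typing import Callable

Stage = tuple[str, Callable[[], bool]]


def run_pipeline(stages: list[Stage]) -> list[str]:
    """Run stages in order and stop at the first failed exit check.

    Returns the names of the stages that completed successfully.
    """
    completed = []
    for name, exit_check in stages:
        if not exit_check():
            print(f"stopping: exit check failed at '{name}'")
            break
        completed.append(name)
    return completed


# Example run with a simulated failure in staging.
EXAMPLE_STAGES: list[Stage] = [
    ("planning_and_design", lambda: True),
    ("ci_and_tests", lambda: True),
    ("artifact_creation", lambda: True),
    ("staging_deployment", lambda: False),  # simulated smoke-test failure
    ("production_deployment", lambda: True),
    ("monitoring_and_learning", lambda: True),
]
```

With the example stages, production is never reached because staging fails its exit check, and nothing in the runner offers a way to bypass that.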

The Smoke Test That Saved My Deployment

One of my cleaner saves came from a simple smoke test. We had just finished an automation deployment for n8n that handled sign-up flows and billing triggers. Logs looked fine. Containers were healthy. If I had trusted only the platform metrics, I would have declared success and gone home.

Instead, I ran my standard smoke test set. That included creating a fake user, walking through the sign-up form, and checking the downstream systems that n8n was supposed to update. The account showed up, but the billing system never received its event. A small typo in an environment variable had broken a single webhook target.

For n8n, my smoke tests often check that:

- The main webhook endpoints respond as expected.

- Scheduled workflows fire on time.

- Access to key external APIs still works.

- Logs and metrics show new activity correctly.

Automated health checks help here too. Simple scripts can hit key endpoints, count failed workflow runs, and test database connectivity. When any of these fail after an automation deployment, I treat that as a red light, roll back if needed, then fix forward.
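A health check script of that kind can stay very small. This sketch uses only the Python standard library, and the list of URLs to check is an assumption you would replace with your own endpoints. A webhook registered for POST will not answer a plain GET with 200, so adapt the request to the endpoint.

```python
import urllib.error
import urllib.request
from typing import Callable


def http_status(url: str, timeout: float = 5.0) -> int:
    """Return the HTTP status of a GET request, or 0 if no response arrived."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code  # the server answered, but with an error status
    except OSError:
        return 0  # connection refused, DNS failure, timeout, and similar


def run_smoke_tests(
    urls: list[str], fetch: Callable[[str], int] = http_status
) -> list[str]:
    """Return the URLs that did not answer with HTTP 200."""
    return [url for url in urls if fetch(url) != 200]
```

A non-empty result is the red light. The `fetch` parameter exists so the logic can be tested without a network, for example by passing a dictionary lookup in place of `http_status`.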

When a smoke test fails, the important part is discipline. Roll back or fix fast, and do not try to reason yourself into accepting a half-broken release. That one safeguard has saved me from silent failures more times than I like to admit.

Common Deployment Pitfalls I’ve Learned To Avoid

Over dozens of projects, a few recurring pitfalls show up so often that I now look for them on day one:

- Automating chaos. Teams take a broken manual process, wire it into n8n, and then act surprised when the chaos speeds up. Automation deployment does not fix bad workflows; it just makes them run faster and fail at higher volume.

- Tight coupling and dependency tangles. When services are too tightly bound to each other, every deployment becomes a mini-project that touches many pieces at once. I once watched a team deploy n8n, an internal API, and a front-end slice in one giant push because none of them could run with the old version of the others. A tiny regression forced a wide rollback and a long night. Since then I push hard for backward-compatible changes and clear boundaries.

- “Works on my machine” assumptions. A developer tests n8n on a laptop with different environment variables, mocked APIs, or extra access rights. In production, those assumptions fall apart. This is why I insist on staging that mirrors production as closely as budgets allow.

- Security shortcuts. Hardcoded API keys in config files, admin panels exposed on the internet without proper access rules, or shared logins for the automation engine all show up more often than people like to admit. Automation deployment multiplies those risks because a single compromise can touch many systems.

- Missing documentation. I have been pulled into incidents where nobody could explain how n8n was deployed, which scripts did what, or where to find the current configuration. When the only manual is a Slack thread from six months ago, outages drag on far longer than needed.

- Weak testing. Deployment automation without serious test automation is a bad mix. For n8n, even a small set of contract tests around key workflows and mock calls to external APIs makes a big difference. At VibeAutomateAI I now flag any automation deployment plan that lacks clear tests as high risk, no matter how experienced the team is.

“The only way to move fast is to move safely.” — Common SRE Principle

Most of these pitfalls are about safety nets that were never built.
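For the weak-testing pitfall in particular, a contract test does not need a framework to be useful. The sketch below checks a payload against a hand-written contract. The billing event fields are invented for illustration and `contract_violations` is a hypothetical helper.

```python
# Hypothetical contract for an event a workflow sends to a billing system.
BILLING_EVENT_CONTRACT: dict[str, type] = {
    "user_id": str,
    "plan": str,
    "amount_cents": int,
}


def contract_violations(payload: dict, contract: dict[str, type]) -> list[str]:
    """List human-readable problems; an empty list means the payload conforms."""
    problems = []
    for field, expected_type in contract.items():
        if field not in payload:
            problems.append(f"missing field: {field}")
        elif not isinstance(payload[field], expected_type):
            problems.append(
                f"wrong type for {field}: expected {expected_type.__name__}"
            )
    return problems
```

Running a check like this against mocked responses in CI catches a renamed or retyped field before it reaches a live workflow.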

How VibeAutomateAI Approaches n8n Deployment Decisions

VibeAutomateAI grew from exactly the kind of painful automation deployment stories I have been sharing. I wanted a place where people could get straight answers about n8n Cloud versus self-hosted n8n, backed by real projects rather than generic vendor claims. So the approach I use with clients is methodical, blunt, and very focused on real risk.

When I help a team, I start with a deployment consultation that maps:

- Current infrastructure (AWS, Google Cloud, Azure, DigitalOcean, on-prem hardware, or hybrids).

- Data classes and sensitivity levels that workflows will touch.

- Expected scale for workflows, triggers, and API calls.

The goal is to fit automation deployment into what already works instead of forcing a shiny new stack.

From there I apply a structured decision framework. I walk through the same questions from earlier about data sensitivity, DevOps capacity, and automation roadmap. Together we explore what cloud would look like with strong security controls, and what self-hosting would demand in operational effort and skill.

VibeAutomateAI also maintains practical playbooks for both n8n Cloud and self-hosted installs. These include step-by-step guides for initial setup, configuration patterns, monitoring hooks, and common extension work such as custom code nodes or community packages. When someone wants to run n8n behind VPNs or inside private networks, I help design the right mix of ingress rules, proxies, and identity controls.

A growing part of the work involves AI automation, an area where research suggests AI affects both productivity and quality in business implementations. Many teams want to add AI models that handle classification, routing, or content generation inside workflows. I advise on how different deployment models affect data privacy here, and when self-hosted models or private endpoints make more sense.

Under all of this sits a simple philosophy: the best automation deployment choice is the one that lines up with the organization’s risk tolerance, skills, and priorities — not the choice that looks most impressive in a slide deck. VibeAutomateAI exists to make that match clear, even when the answer is “stick with cloud for now and revisit self-hosting later.”

Conclusion

After enough projects, a pattern appears. Automation deployment is never just a technical task. It is a strategic decision about who controls data, who carries operational risk, and how far future AI work can go. Treating it as “just another app deploy” is how outages, rushed migrations, and compliance headaches start.

Starting simple is not a weakness. In many cases, n8n Cloud with solid security and good process beats a half-baked self-hosted stack. As needs grow, a careful move to self-hosted n8n can make sense, but only when the team truly understands the maintenance and on-call load that comes with it.

The most successful automation deployments I have seen share a few traits: honest assessment of skills and capacity, respect for configuration management and version control, clear smoke tests and rollback steps, and a mindset that treats deployment automation as an ongoing cycle of small improvements, not a one-time project.

If automation feels new, the next step might be a small cloud deployment with one or two high-value workflows. For teams already deep into automation, the next step may be a skills audit or a review of current deployment scripts. In both cases, VibeAutomateAI is there with candid guides and hands-on help for n8n deployment choices.

With the right questions, a solid process, and a clear view of risks, automation deployment stops feeling like rolling the dice and starts feeling like a controlled upgrade to how the business runs.

FAQs

Question: Should I Start With n8n Cloud Or Self-Hosted n8n For My First Automation Project?

For a first automation project, I almost always recommend starting with n8n Cloud. Most teams have limited DevOps capacity at the beginning, and managed hosting removes a lot of early friction so they can focus on building workflows and proving value. Self-hosted n8n from day one only makes sense when strict compliance rules, private networks, or data residency demands clearly rule out cloud. The good news is that migration from cloud to self-hosted is very possible once automation becomes important enough to justify that move. The decision framework in this article mirrors how I guide that choice.

Question: What Technical Skills Does My Team Need To Self-Host n8n Successfully?

A reliable self-hosted n8n setup requires more than basic server access. Someone on the team should be comfortable with Docker or Kubernetes, Postgres administration, and setting up monitoring and alerting for both the application and the database. There also needs to be a clear on-call owner who can react to incidents, apply security updates, and handle restarts without guesswork. Backup creation and restore practice are essential, not optional extras. If those skills and responsibilities are not present yet, starting with n8n Cloud is usually the safer and more realistic option.

Question: How Do I Handle Sensitive Data In Cloud-Based Automation Deployments?

Handling sensitive data in cloud-based automation starts with understanding data flow through every workflow. I pay attention to which fields travel through n8n, how they are stored, and which third parties receive them. Strong encryption in transit and at rest is a must, along with proper secrets management for API keys and credentials. In some cases, careful scoping and masking make cloud usage acceptable even for sensitive data, especially when the provider supports compliance with standards and regulations such as HIPAA, GDPR, or SOC 2. When requirements demand strict control over storage or network paths, that is when self-hosted n8n often becomes the right call.

Question: What’s The Real Cost Difference Between n8n Cloud And Self-Hosted Deployment?

The visible costs are easy to compare. n8n Cloud comes with a subscription fee, while self-hosted n8n requires paying for servers, storage, and bandwidth. The less obvious costs live in staff time spent on installation, monitoring, patching, backups, and on-call work. A fair comparison adds all of that into a total cost of ownership view over at least a year. As automation usage grows, self-hosting can become cheaper at scale, especially when teams already run strong DevOps practices. On the other hand, the opportunity cost of pulling engineers into infrastructure work instead of product features often tips early stages in favor of cloud.
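The total cost of ownership comparison is simple arithmetic once staff time is included. In the sketch below every number is a placeholder, not real n8n pricing or a real salary figure, and `total_cost` is a hypothetical helper.

```python
def total_cost(
    monthly_fee: float,
    staff_hours_per_month: float,
    hourly_rate: float,
    months: int = 12,
) -> float:
    """Total cost of ownership: recurring fees plus staff time over the period."""
    return months * (monthly_fee + staff_hours_per_month * hourly_rate)


# Placeholder inputs: substitute your own subscription, server, and staff costs.
cloud = total_cost(monthly_fee=50, staff_hours_per_month=1, hourly_rate=80)
self_hosted = total_cost(monthly_fee=40, staff_hours_per_month=10, hourly_rate=80)
```

With these placeholder inputs the cloud option totals 1,560 for the year and the self-hosted option 10,080, almost entirely because of staff hours. Changing the hours is usually what flips the comparison, which is why an honest estimate of maintenance time matters more than the server bill.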
